Old Rasch Forum - Rasch on the Run: 2012


65. Some question on equating and linking method

iyliajamil December 14th, 2012, 3:07am:

Hi,

I'm Iylia, a PhD student from University Science of Malaysia.

My research is on developing a computer adaptive test (CAT) for Malaysian polytechnic diploma-level students. One requirement for developing a CAT is a calibrated item bank. I am interested in the Rasch model and intend to use it to equate and link the questions in the item bank.

First, about the item bank: is it necessary to write more than 1,000 items to build it? I have read somewhere that the bank needs at least 12 times as many questions as the actual test. So if we want a test of 30 questions, we must prepare 360 questions for the item bank.

Second, when we assign our items to several sets of questions, what is the minimum number of respondents needed for each set if we want to equate them using the Rasch model? Are 30 respondents enough?

Third, I have read some journal articles on equating and linking methods which say that a set of anchor items is needed to equate several sets of test items.

The question is:

Is there a rule giving the minimum number of anchor items?

In my reading, some say 2 items, some say 5, and some say 10. What is your opinion as an expert in Rasch?

That's all for now. I really hope to get feedback from you.

Mike.Linacre:

Thank you for your questions, iyliajamil.

There is no minimum size to an item bank, and there is no minimum test length, and there is no need for anchor items.

You are developing a CAT test. There will be no anchor items. You don't want anchor items, because those items will be over-used, and so are likely to become exposed.

If you are administering a high-stakes CAT test, then persons with abilities near crucial pass-fail points will be administered 200 items. Those far from pass-fail points (usually very high or very low ability) will be administered about 30 items, at least enough for complete coverage of the relevant content areas.

If you are administering a high-stakes CAT test with 200 items administered, and this is administered to 10,000 students, then you will need a very large item bank (thousands of items) in order to avoid over-exposure of items with difficulties near pass-fail points.

Or, if your test is low-stakes, and item exposure is not a problem, and low measurement precision is acceptable, then the item bank can be small, and only a few test items need to be administered. See https://www.rasch.org/rmt/rmt52b.htm

You may also want to look at www.rasch.org/memo69.pdf

iyliajamil:

Thank you for your swift reply.

If we don't have anchor items, how do we link one test to another?

To use CAT we need an item bank with a difficulty level for each item.

From what I know, to get the difficulty level of each question using Rasch, students must answer the question. Let's say I have 500 questions to put in an item bank. It is impossible to get one student to answer all 500 questions, so I need to break them into several sets containing fewer items, for example 50 questions per set. Then I have 10 sets of questions that will be answered by 10 different groups of students.

Can you explain this to me?

Thank you.

Mike.Linacre:

There are three stages in this process, iyliajamil.

1) Constructing the starting item bank. This is done once.

You have 500 questions you want to put into your bank. You can only administer 50 questions to one person.

So construct 11 tests with this type of linked design:

Test 1: 5 items in common with Test 11 + 40 items + 5 items in common with Test 2

Test 2: 5 items in common with Test 1 + 40 items + 5 items in common with Test 3

Test 3: 5 items in common with Test 2 + 40 items + 5 items in common with Test 4

....

Test 10: 5 items in common with Test 9 + 40 items + 5 items in common with Test 11

Test 11: 5 items in common with Test 10 + 40 items + 5 items in common with Test 1

These 11 tests have 495 items, so adjust the numbers if there are exactly 500 items.

Administer the 11 tests to at least 30 on-target persons each.

Concurrently calibrate all 11 datasets.

2) Administering CAT tests. This may be done every day.

This uses the item difficulties from 1)

3) Maintaining the item bank and adding new items. This may be done twice a year.

OK?
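As a rough illustration of the circular linked design in stage 1, the following Python sketch builds the item lists and counts the distinct items. The function name and the item identifiers (`L…` for link blocks, `U…` for unique items) are hypothetical and chosen only for this example.

```python
def linked_design(n_tests, n_unique, n_link):
    """Return one list of item ids per test for a circular chain of tests.

    Link block k is shared by test k and test k+1; the last test links
    back to the first, which closes the chain.
    """
    tests = []
    for k in range(n_tests):
        prev_link = [f"L{(k - 1) % n_tests}_{j}" for j in range(n_link)]
        unique = [f"U{k}_{j}" for j in range(n_unique)]
        next_link = [f"L{k}_{j}" for j in range(n_link)]
        tests.append(prev_link + unique + next_link)
    return tests


design = linked_design(n_tests=11, n_unique=40, n_link=5)
bank = {item for test in design for item in test}
shared = set(design[0]) & set(design[1])
print(len(design[0]), len(bank), len(shared))  # items per test, distinct items, overlap
```

With 11 tests, 40 unique items and 5 link items per block, each test has 50 items and the bank holds 495 distinct items, matching the count given above.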

iyliajamil:

Thanks, it's very helpful.

I will read the articles you linked and get back to you if there is anything I don't understand.

iyliajamil:

Hi, it's me again.

I now have the data for my items.

There are 1080 MCQ items in total, divided into 36 sets. I followed your earlier advice: each set has 25 unique items and 10 common items (the first 5 and the last 5 items in each set are the common items).

My question now is:

How should I analyze these data? Most of the examples show the linking method for only two sets of data.

I'm using the common-item equating method.

Is it possible to analyze all 36 sets at once, or do I have to do it in pairs?

For example:

set 1 with set 2

set 2 with set 3, and so on until set 36 with set 1

I'll be waiting for your reply.

Thank you.

iyliajamil:

There is one more question.

Usually the first step in a Rasch item analysis is to look at the fit table, eliminate the items that do not fit, and run the analysis again.

Am I correct?

So if we have to do the equating, do we eliminate the misfitting items in each set before equating or after equating?

Mike.Linacre:

Glad to see you are making progress, Iyliajamil.

1. Analyze each of the 36 sets by itself. Verify that all is correct. Don't worry about misfit yet. Be sure that the person labels for each set include a set identifier.

2. Analyze all 36 sets together. In Winsteps, MFORMS= can help you.

3. Do an "item x set identifier" DIF analysis. This will tell you if some of the common items have behaved differently in different sets. If they have, use MFORMS= to split those common items into separate items.

4. Now worry about misfit - if it is really serious. See: https://www.rasch.org/rmt/rmt234g.htm - in this design, the tests are already short, so we really want to keep all the items that we can.

iyliajamil:

Thanks for your swift reply.

I will try it and get back to you as soon as possible.

iyliajamil:

Hi, it's me again.

What do you mean by including a set identifier?

I have created a control file using MFORMS= but have not yet tried to run it.

I have now done steps (1) and (2) from your previous post; I am just not sure what a set identifier really means.

I attach an example of my control file for analyzing each set individually, and the MFORMS= control file that I created by following the example. (My data are dichotomous: 0, 1.)

Thank you.

iyliajamil:

Example control file for an individual set.

I am not sure whether you can open the file that I attached. If you cannot, may I have your email address so that I can send it to you directly?

Thank you.

iyliajamil:

I labeled the persons as follows, for example:

persons who answered set 1: 0101 to 0134

persons who answered set 13: 1301 to 1340

The first two digits are the set number, and the third and fourth digits are the person ID.

Is this what you mean by a person label with a set identifier?

Mike.Linacre:

Yes, iyliajamil.

Now you can do an item by answer-set DIF analysis in a combined analysis of all the subsets.

If 0101 are in columns 1-4 of the person label, then

DIF = S1W2 ; the set number

for an analysis with Winsteps Table 30.
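To make the idea of a set identifier inside the person label concrete, here is a small Python sketch of the grouping that a "start column, width" specification describes. It only mimics the selection on the example labels from this thread; `dif_class` is a hypothetical helper, not a Winsteps function.

```python
def dif_class(person_label, start, width):
    """Return the part of a person label beginning at 1-based column `start`."""
    return person_label[start - 1:start - 1 + width]


labels = ["0101", "0134", "1301", "1340"]

# Group persons by the two-character set number in columns 1-2 of the label.
groups = {}
for label in labels:
    groups.setdefault(dif_class(label, start=1, width=2), []).append(label)

print(groups)
```

Persons 0101 and 0134 fall into class "01", and persons 1301 and 1340 into class "13", so each class corresponds to one answer set.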

iyliajamil:

Thank you, Mr. Linacre.

I have run my control file using MFORMS=, and it is working.

I'll get back to you after running the DIF analysis.

iyliajamil:

Hi Mr. Linacre.

I have run all 36 sets separately; some of them have several misfitting items.

I also tried combining all 36 sets into one analysis using MFORMS=.

My problem now is that I don't know how to do the DIF analysis to find out which items behave differently.

If there are such items, how do I separate them using MFORMS=?

Do I need to declare the set identifier in the control file of each set? If yes, how?

I look forward to your explanation.

Thank you.

This is an example of my control file for one set:

&INST

TITLE='ANALISIS DATA SET 1'

ITEM1=5

NI=35

XWIDE=1

NAME1=1

CODES=01

TABLES=1010001000111001100001

Data=set1.dat

&END

i1

i2

i3

i4

i5

i6

i7

i8

i9

i10

i11

i12

i13

i14

i15

i16

i17

i18

i19

i20

i21

i22

i23

i24

i25

i26

i27

i28

i29

i30

i31

i32

i33

i34

i35

END NAMES

My MFORMS= control file:

TITLE="Combination 36 set test item"

NI=1080

ITEM1=5

NAME1=1

CODES=01

TABLES=1010001000111001100001

mforms=*

data=set1.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=5 ;item 1-5 in colums 5-9

I31-35=10 ;item 31-35 in columns 10-14

#

data=set2.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=10 ;item 1-5 in colums 10-14

I31-35=15 ;item 31-35 in columns 15-19

#

data=set3.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=15 ;item 1-5 in colums 15-19

I31-35=20 ;item 31-35 in columns 20-24

#

data=set4.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=20 ;item 1-5 in colums 20-24

I31-35=25 ;item 31-35 in columns 25-29

#

data=set5.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=25 ;item 1-5 in colums 25-29

I31-35=30 ;item 31-35 in columns 30-34

#

data=set6.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=30 ;item 1-5 in colums 30-34

I31-35=35 ;item 31-35 in columns 35-39

#

data=set7.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=35 ;item 1-5 in colums 35-39

I31-35=40 ;item 31-35 in columns 40-44

#

data=set8.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=40 ;item 1-5 in colums 40-44

I31-35=45 ;item 31-35 in columns 45-49

#

data=set9.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=45 ;item 1-5 in colums 45-49

I31-35=50 ;item 31-35 in columns 50-54

#

data=set10.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=50 ;item 1-5 in colums 50-54

I31-35=55 ;item 31-35 in columns 55-59

#

data=set11.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=55 ;item 1-5 in colums 55-59

I31-35=60 ;item 31-35 in columns 60-64

#

data=set12.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=60 ;item 1-5 in colums 60-64

I31-35=65 ;item 31-35 in columns 65-69

#

data=set13.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=65 ;item 1-5 in colums 65-69

I31-35=70 ;item 31-35 in columns 70-74

#

data=set14.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=70 ;item 1-5 in colums 70-74

I31-35=75 ;item 31-35 in columns 75-79

#

data=set15.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=75 ;item 1-5 in colums 75-79

I31-35=80 ;item 31-35 in columns 80-84

#

data=set16.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=80 ;item 1-5 in colums 80-84

I31-35=85 ;item 31-35 in columns 85-89

#

data=set17.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=85 ;item 1-5 in colums 85-89

I31-35=90 ;item 31-35 in columns 90-94

#

data=set18.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=90 ;item 1-5 in colums 90-94

I31-35=95 ;item 31-35 in columns 95-99

#

data=set19.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=95 ;item 1-5 in colums 95-99

I31-35=100 ;item 31-35 in columns 100-104

#

data=set20.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=100 ;item 1-5 in colums 100-104

I31-35=105 ;item 31-35 in columns 105-109

#

data=set21.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=105 ;item 1-5 in colums 105-109

I31-35=110 ;item 31-35 in columns 110-114

#

data=set22.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=110 ;item 1-5 in colums 110-114

I31-35=115 ;item 31-35 in columns 115-119

#

data=set23.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=115 ;item 1-5 in colums 115-119

I31-35=120 ;item 31-35 in columns 120-124

#

data=set24.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=120 ;item 1-5 in colums 120-124

I31-35=125 ;item 31-35 in columns 125-129

#

data=set25.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=125 ;item 1-5 in colums 125-129

I31-35=130 ;item 31-35 in columns 130-134

#

data=set26.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=130 ;item 1-5 in colums 130-134

I31-35=135 ;item 31-35 in columns 135-139

#

data=set27.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=135 ;item 1-5 in colums 135-139

I31-35=140 ;item 31-35 in columns 140-144

#

data=set28.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=140 ;item 1-5 in colums 140-144

I31-35=145 ;item 31-35 in columns 145-149

#

data=set29.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=145 ;item 1-5 in colums 145-149

I31-35=150 ;item 31-35 in columns 150-154

#

data=set30.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=150 ;item 1-5 in colums 150-154

I31-35=155 ;item 31-35 in columns 155-159

#

data=set31.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=155 ;item 1-5 in colums 155-159

I31-35=160 ;item 31-35 in columns 160-164

#

data=set32.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=160 ;item 1-5 in colums 160-164

I31-35=165 ;item 31-35 in columns 165-169

#

data=set33.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=165 ;item 1-5 in colums 165-169

I31-35=170 ;item 31-35 in columns 170-174

#

data=set34.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=170 ;item 1-5 in colums 170-174

I31-35=175 ;item 31-35 in columns 175-179

#

data=set35.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=175 ;item 1-5 in colums 175-179

I31-35=180 ;item 31-35 in columns 180-184

#

data=set36.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

I1-5=180 ;item 1-5 in colums 180-184

I31-35=5 ;item 31-35 in columns 5-9

*

&END

Mike.Linacre:

iyliajamil, please add your set number to the person label, then use it in your DIF analysis

TITLE="Combination 36 set test item"

NI=1080

ITEM1=7

NAME1=1

NAMELENGTH = 6

DIF=6W2 ; the set number

CODES=01

TABLES=1010001000111001100001

mforms=*

data=set1.txt

L=1 ;one line per person

P1-4=1 ;person label in columns 1-4

C5-6 = "01" ; set 01

I1-5=5 ;item 1-5 in columns 5-9

I31-35=10 ;item 31-35 in columns 10-14

#

data=set2.txt

L=1 ;one line per person

P1-4=1 ;person label in columns 1-4

C5-6 = "02" ; set 02

I1-5=10 ;item 1-5 in columns 10-14

I31-35=15 ;item 31-35 in columns 15-19

#

....

iyliajamil:

OK, I have added those commands to the control file for DIF.

What about the control file for each individual set: do I need to change anything, or leave it as it is?

Mike.Linacre:

iyliajamil, there is no change to the control files for each of the 36 datasets unless you want to do something different in their analyses.

The change in MFORMS= is so that you can do a DIF analysis of (item x dataset).

iyliajamil:

I have done it.

But the result does not make sense, because the item labels are not the same as mine.

The result came out something like this:

TABLE 10.1 Combination 36 set test item ZOU421ws.txt Sep 5 15:06 2013

INPUT: 1656 PERSONS, 1080 ITEMS MEASURED: 14 PERSONS, 10 ITEMS, 2 CATS 3.57.2

--------------------------------------------------------------------------------

PERSON: REAL SEP.: .00 REL.: .00 ... ITEM: REAL SEP.: .00 REL.: .00

ITEM STATISTICS: MISFIT ORDER

+-----------------------------------------------------------------------+

|ENTRY RAW MODEL| INFIT | OUTFIT |PTMEA| |

|NUMBER SCORE COUNT MEASURE S.E. |MNSQ ZSTD|MNSQ ZSTD|CORR.| ITEM |

|------------------------------------+----------+----------+-----+------|

| 2 2 4 .55 1.07|1.47 1.2|1.56 1.3|A .10| I0002|

| 35 2 4 .62 1.06|1.01 .1| .97 .0|B .51| I0035|

| 31 4 5 -1.08 1.15| .99 .2| .82 .1|C .18| I0031|

| 32 2 4 .62 1.06| .99 .1| .95 .0|c .53| I0032|

| 1 3 4 -.69 1.20| .94 .1| .75 .0|b .32| I0001|

| 5 3 5 -.02 .96| .73 -.9| .67 -.7|a .68| I0005|

|------------------------------------+----------+----------+-----+------|

| MEAN 1.6 4.2 1.32 1.41|1.02 .1| .95 .1| | |

| S.D. 1.4 .4 1.70 .41| .22 .6| .29 .6| | |

+-----------------------------------------------------------------------+

TABLE 10.3 Combination 36 set test item ZOU421ws.txt Sep 5 15:06 2013

INPUT: 1656 PERSONS, 1080 ITEMS MEASURED: 14 PERSONS, 10 ITEMS, 2 CATS 3.57.2

--------------------------------------------------------------------------------

ITEM CATEGORY/OPTION/DISTRACTER FREQUENCIES: MISFIT ORDER

+-------------------------------------------------------------+

|ENTRY DATA SCORE | DATA | AVERAGE S.E. OUTF| |

|NUMBER CODE VALUE | COUNT % | MEASURE MEAN MNSQ| ITEM |

|--------------------+------------+--------------------+------|

| 2 A 0 0 | 2 33 | .72 1.02 1.9 |I0002 | 0

| 1 1 | 4 67 | .89 .33 1.2 | | 1

| MISSING *** | 1648 100*| .81 .25 | |

| | | | |

| 35 B 0 0 | 2 33 | .41 .36 .9 |I0035 | 0

| 1 1 | 4 67 | 1.15 .39 1.1 | | 1

| MISSING *** | 1648 100*| .76 .27 | |

| | | | |

| 31 C 0 0 | 1 17 | .05 .8 |I0031 | 0

| 1 1 | 5 83 | .40 .39 1.0 | | 1

| MISSING *** | 1648 100*| 1.18 .16 | |

| | | | |

| 32 c 0 0 | 2 33 | .39 .38 .8 |I0032 | 0

| 1 1 | 4 67 | 1.16 .38 1.1 | | 1

| MISSING *** | 1648 100*| .76 .27 | |

| | | | |

| 1 b 0 0 | 1 20 | .01 .7 |I0001 | 0

| 1 1 | 4 80 | .59 .44 1.0 | | 1

| MISSING *** | 1649 100*| 1.01 .22 | |

| | | | |

| 5 a 0 0 | 2 33 | -.14 .16 .6 |I0005 | 0

| 1 1 | 4 67 | .85 .35 .8 | | 1

| MISSING *** | 1648 100*| 1.05 .24 | |

+-------------------------------------------------------------+

TABLE 10.4 Combination 36 set test item ZOU421ws.txt Sep 5 15:06 2013

INPUT: 1656 PERSONS, 1080 ITEMS MEASURED: 14 PERSONS, 10 ITEMS, 2 CATS 3.57.2

--------------------------------------------------------------------------------

MOST MISFITTING RESPONSE STRINGS

ITEM OUTMNSQ |PERSON

|

high

low|

|

TABLE 10.5 Combination 36 set test item ZOU421ws.txt Sep 5 15:06 2013

INPUT: 1656 PERSONS, 1080 ITEMS MEASURED: 14 PERSONS, 10 ITEMS, 2 CATS 3.57.2

--------------------------------------------------------------------------------

MOST UNEXPECTED RESPONSES

ITEM MEASURE |PERSON

|

high

low|

|

TABLE 10.6 Combination 36 set test item ZOU421ws.txt Sep 5 15:06 2013

INPUT: 1656 PERSONS, 1080 ITEMS MEASURED: 14 PERSONS, 10 ITEMS, 2 CATS 3.57.2

--------------------------------------------------------------------------------

MOST UNEXPECTED RESPONSES

+-------------------------------------------------------------------------------------+

| DATA |OBSERVED|EXPECTED|RESIDUAL|ST. RES.|MEASDIFF| ITEM | PERSON | ITEM | PERSON |

|------+--------+--------+--------+--------+--------+-------+--------+-------+--------|

| 0 | 0 | .77 | -.77 | -1.82 | 1.20 | 2 | 100 | I0002 | TAB03 |

| 0 | 0 | .75 | -.75 | -1.75 | 1.12 | 31 | 8 | I0031 | TAB01 |

| 0 | 0 | .67 | -.67 | -1.42 | .70 | 1 | 54 | I0001 | TAB02 |

| 1 | 1 | .35 | .65 | 1.35 | -.61 | 35 | 54 | I0035 | TAB02 |

| 1 | 1 | .36 | .64 | 1.33 | -.57 | 32 | 8 | I0032 | TAB01 |

| 1 | 1 | .37 | .63 | 1.31 | -.53 | 2 | 54 | I0002 | TAB02 |

| 0 | 0 | .54 | -.54 | -1.08 | .15 | 35 | 146 | I0035 | TAB04 |

| 0 | 0 | .54 | -.54 | -1.08 | .15 | 32 | 146 | I0032 | TAB04 |

| 0 | 0 | .51 | -.51 | -1.01 | .03 | 5 | 54 | I0005 | TAB02 |

| 1 | 1 | .56 | .44 | .89 | .23 | 2 | 146 | I0002 | TAB04 |

| 0 | 0 | .43 | -.43 | -.87 | -.28 | 5 | 192 | I0005 | TAB05 |

| 1 | 1 | .60 | .40 | .82 | .39 | 1 | 192 | I0001 | TAB05 |

| 1 | 1 | .69 | .31 | .68 | .78 | 31 | 192 | I0031 | TAB05 |

+-------------------------------------------------------------------------------------+

Is it because I labeled the items the same in every set?

Do I have to label the items differently in every set so that Winsteps produces the correct output?

Example:

Set 1

a1,a2,a3.......

Set 2

b1,b2,b3......

Set 3

c1,c2,c3......

Mike.Linacre:

iyliajamil, the item labels are placed after &END in the Winsteps file that contains your MFORMS= instructions.

For instance:

....

data=set36.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

C5-6 = "36" ; set 36

I1-5=180 ;item 1-5 in colums 180-184

I31-35=5 ;item 31-35 in columns 5-9

*

&END

i1

i2

i3

i4

i5

i6

i7

i8

i9

i10

i11

i12

i13

i14

i15

i16

i17

i18

i19

i20

i21

i22

i23

i24

i25

i26

i27

i28

i29

i30

i31

i32

i33

i34

i35

END LABELS

iyliajamil:

I have put the item names after the &END command, and the output looks like this:

TABLE 30.1 Combination 36 set test item ZOU785ws.txt Sep 9 13:44 2013

INPUT: 1656 PERSONS, 1080 ITEMS MEASURED: 14 PERSONS, 10 ITEMS, 2 CATS 3.57.2

--------------------------------------------------------------------------------

DIF class specification is: DIF=6W2

+---------------------------------------------------------------------------------------+

| PERSON DIF DIF PERSON DIF DIF DIF JOINT ITEM |

| CLASS MEASURE S.E. CLASS MEASURE S.E. CONTRAST S.E. t d.f. Prob. Number Name |

|---------------------------------------------------------------------------------------|

| 2 .85< 2.18 3 .87> 2.20 -.03 3.09 -.01 0 .0000 1 i1 |

| 2 .85< 2.18 4 -.08> 2.19 .93 3.08 .30 0 .0000 1 i1 |

| 2 .85< 2.18 5 -1.14> 2.18 1.99 3.08 .65 0 .0000 1 i1 |

| 3 .87> 2.20 4 -.08> 2.19 .96 3.10 .31 0 .0000 1 i1 |

| 3 .87> 2.20 5 -1.14> 2.18 2.02 3.09 .65 0 .0000 1 i1 |

| 4 -.08> 2.19 5 -1.14> 2.18 1.06 3.09 .34 0 .0000 1 i1 |

| 2 -.83> 2.18 3 2.58< 2.17 -3.40 3.08 -1.11 0 .0000 2 i2 |

| 2 -.83> 2.18 4 -.07> 2.18 -.75 3.08 -.24 0 .0000 2 i2 |

| 2 -.83> 2.18 5 .55< 2.18 -1.37 3.08 -.45 0 .0000 2 i2 |

| 3 2.58< 2.17 4 -.07> 2.18 2.65 3.08 .86 0 .0000 2 i2 |

| 3 2.58< 2.17 5 .55< 2.18 2.03 3.08 .66 0 .0000 2 i2 |

| 4 -.07> 2.18 5 .55< 2.18 -.62 3.08 -.20 0 .0000 2 i2 |

| 1 -.79> 2.18 2 .85< 2.18 -1.65 3.08 -.53 0 .0000 5 i5 |

| 1 -.79> 2.18 3 .89> 2.19 -1.68 3.09 -.54 0 .0000 5 i5 |

| 1 -.79> 2.18 4 -.08> 2.18 -.72 3.08 -.23 0 .0000 5 i5 |

| 1 -.79> 2.18 5 .54< 2.18 -1.34 3.08 -.43 0 .0000 5 i5 |

| 2 .85< 2.18 3 .89> 2.19 -.03 3.09 -.01 0 .0000 5 i5 |

| 2 .85< 2.18 4 -.08> 2.18 .93 3.08 .30 0 .0000 5 i5 |

| 2 .85< 2.18 5 .54< 2.18 .31 3.08 .10 0 .0000 5 i5 |

| 3 .89> 2.19 4 -.08> 2.18 .96 3.09 .31 0 .0000 5 i5 |

| 3 .89> 2.19 5 .54< 2.18 .34 3.09 .11 0 .0000 5 i5 |

| 4 -.08> 2.18 5 .54< 2.18 -.62 3.08 -.20 0 .0000 5 i5 |

| 1 .88< 2.17 2 -.84> 2.18 1.72 3.08 .56 0 .0000 31 i31 |

| 1 .88< 2.17 3 .86> 2.20 .02 3.09 .01 0 .0000 31 i31 |

| 1 .88< 2.17 4 -.09> 2.19 .97 3.09 .31 0 .0000 31 i31 |

| 1 .88< 2.17 5 -1.15> 2.18 2.03 3.08 .66 0 .0000 31 i31 |

| 2 -.84> 2.18 3 .86> 2.20 -1.70 3.10 -.55 0 .0000 31 i31 |

| 2 -.84> 2.18 4 -.09> 2.19 -.75 3.09 -.24 0 .0000 31 i31 |

| 2 -.84> 2.18 5 -1.15> 2.18 .31 3.09 .10 0 .0000 31 i31 |

| 3 .86> 2.20 4 -.09> 2.19 .95 3.10 .31 0 .0000 31 i31 |

| 3 .86> 2.20 5 -1.15> 2.18 2.01 3.10 .65 0 .0000 31 i31 |

| 4 -.09> 2.19 5 -1.15> 2.18 1.06 3.09 .34 0 .0000 31 i31 |

| 1 -.79> 2.18 2 .86< 2.18 -1.65 3.08 -.53 0 .0000 32 i32 |

| 1 -.79> 2.18 3 .90> 2.18 -1.69 3.08 -.55 0 .0000 32 i32 |

| 1 -.79> 2.18 4 1.61< 2.18 -2.40 3.08 -.78 0 .0000 32 i32 |

| 2 .86< 2.18 3 .90> 2.18 -.04 3.09 -.01 0 .0000 32 i32 |

| 2 .86< 2.18 4 1.61< 2.18 -.75 3.08 -.24 0 .0000 32 i32 |

| 3 .90> 2.18 4 1.61< 2.18 -.72 3.08 -.23 0 .0000 32 i32 |

| 1 .89< 2.18 2 -.83> 2.18 1.72 3.08 .56 0 .0000 35 i35 |

| 1 .89< 2.18 3 .90> 2.18 .00 3.09 .00 0 .0000 35 i35 |

| 1 .89< 2.18 4 1.61< 2.18 -.72 3.08 -.23 0 .0000 35 i35 |

| 2 -.83> 2.18 3 .90> 2.18 -1.72 3.08 -.56 0 .0000 35 i35 |

| 2 -.83> 2.18 4 1.61< 2.18 -2.44 3.08 -.79 0 .0000 35 i35 |

| 3 .90> 2.18 4 1.61< 2.18 -.72 3.08 -.23 0 .0000 35 i35 |

+---------------------------------------------------------------------------------------+

TABLE 30.2 Combination 36 set test item ZOU785ws.txt Sep 9 13:44 2013

INPUT: 1656 PERSONS, 1080 ITEMS MEASURED: 14 PERSONS, 10 ITEMS, 2 CATS 3.57.2

--------------------------------------------------------------------------------

DIF class specification is: DIF=6W2

+----------------------------------------------------------------------------+

| PERSON OBSERVATIONS BASELINE DIF DIF DIF ITEM |

| CLASS COUNT AVERAGE EXPECT MEASURE SCORE MEASURE S.E. Number Name |

|----------------------------------------------------------------------------|

| 2 1 .00 .67 -.69 -.67 .85< 2.18 1 i1 |

| 3 1 1.00 .92 -.69 .08 .87> 2.20 1 i1 |

| 4 1 1.00 .81 -.69 .19 -.08> 2.19 1 i1 |

| 5 1 1.00 .60 -.69 .40 -1.14> 2.18 1 i1 |

| 2 1 1.00 .37 .55 .63 -.83> 2.18 2 i2 |

| 3 1 .00 .77 .55 -.77 2.58< 2.17 2 i2 |

| 4 1 1.00 .56 .55 .44 -.07> 2.18 2 i2 |

| 5 1 .00 .30 .55 -.30 .55< 2.18 2 i2 |

| 1 1 1.00 .52 -.02 .48 -.79> 2.18 5 i5 |

| 2 1 .00 .51 -.02 -.51 .85< 2.18 5 i5 |

| 3 1 1.00 .85 -.02 .15 .89> 2.19 5 i5 |

| 4 1 1.00 .69 -.02 .31 -.08> 2.18 5 i5 |

| 5 1 .00 .43 -.02 -.43 .54< 2.18 5 i5 |

| 1 1 .00 .75 -1.08 -.75 .88< 2.17 31 i31 |

| 2 1 1.00 .75 -1.08 .25 -.84> 2.18 31 i31 |

| 3 1 1.00 .94 -1.08 .06 .86> 2.20 31 i31 |

| 4 1 1.00 .86 -1.08 .14 -.09> 2.19 31 i31 |

| 5 1 1.00 .69 -1.08 .31 -1.15> 2.18 31 i31 |

| 1 1 1.00 .36 .62 .64 -.79> 2.18 32 i32 |

| 2 1 .00 .35 .62 -.35 .86< 2.18 32 i32 |

| 3 1 1.00 .76 .62 .24 .90> 2.18 32 i32 |

| 4 1 .00 .54 .62 -.54 1.61< 2.18 32 i32 |

| 1 1 .00 .36 .62 -.36 .89< 2.18 35 i35 |

| 2 1 1.00 .35 .62 .65 -.83> 2.18 35 i35 |

| 3 1 1.00 .76 .62 .24 .90> 2.18 35 i35 |

| 4 1 .00 .54 .62 -.54 1.61< 2.18 35 i35 |

+----------------------------------------------------------------------------+

Is it correct now?

Now I can see item numbers 1, 2, 5, 31, 32 and 35.

What happened to items 3, 4, 33 and 34?
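When reading Table 30.1, it may help to see how the contrast columns relate to each other. The Python sketch below assumes the usual construction: the DIF contrast is the difference between the two class measures, the joint S.E. is the square root of the sum of the squared standard errors, and t is their ratio. The function name is hypothetical, and the inputs are the rounded values printed in the first row of the table, so the results can differ from the printed ones in the last digit.

```python
import math


def dif_contrast(measure_a, se_a, measure_b, se_b):
    """Return (contrast, joint standard error, t) for two DIF class measures."""
    contrast = measure_a - measure_b
    joint_se = math.sqrt(se_a ** 2 + se_b ** 2)
    return contrast, joint_se, contrast / joint_se


# First row of Table 30.1: item i1, person class 2 versus person class 3.
contrast, joint_se, t = dif_contrast(0.85, 2.18, 0.87, 2.20)
print(round(contrast, 2), round(joint_se, 2), round(t, 2))
```

With only one observation per class, each standard error is above 2 logits, so no contrast in this output comes anywhere near |t| > 2.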

Mike.Linacre:

Thank you for sharing, iyliajamil.

1. Please look at Table 14.1 - are all the items listed correctly?

2. Please look at Table 18.1 - are all the persons listed correctly?

3. Look at the person labels. In which columns are the set numbers? These should go from 01, 02, 03, 04, .... , 34, 35, 36

4. If the set numbers start in column 5 of the person labels, then

DIF = 5W2

5. Then produce Table 30.

iyliajamil:

Thank you.

1. I have checked Table 14, and the item labels seem to be correct.

2. But when I checked Table 18, I found that the person labels are wrong.

When I analyze the sets individually, both Table 14 and Table 18 are correct.

For example:

For set 1: item labels C1-C5, U0106-U0130, C6-C10 (35 items in total; code C is for common items and code U is for unique items); person labels run from 0101 to 0132.

But when I run my MFORMS= control file, the person labels in Table 18 are not the same as in Table 18 of the individual set analysis.

Here is my MFORMS= control file again; maybe you can detect the mistake that I made:

TITLE="Combination 36 set test item"

NI=1080

ITEM1=7

NAME1=1

NAMELENGTH=6

DIF=6W2 ;the set number

CODES=01

TABLES=1010001000111001100001

mforms=*

data=set1.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

C5-6="01" ;set 01

I1-5=7 ;item 1-5 in colums 7-11

I6-30=12 ;item 6-30 unique set1 in columns 12-36

I31-35=37 ;item 31-35 in columns 37-41

#

data=set2.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

C5-6="02" ;set 02

I1-5=37 ;item 1-5 in colums 37-41

I6-30=42 ;item 6-30 unique set2 in columns 42-66

I31-35=67 ;item 31-35 in columns 67-71

#

.

.

.

.

.

data=set35.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

C5-6="35" ;set 35

I1-5=1027 ;item 1-5 in colums 1027-1031

I6-30=1032 ;item 6-30 unique set35 in columns 1032-1056

I31-35=1057 ;item 31-35 in columns 1057-1061

#

data=set36.txt

L=1 ;one line per person

P1-4=1 ;person lebel in columns 1-4

C5-6="36" ;set 36

I1-5=1057 ;item 1-5 in colums 1057-1061

I6-30=1062 ;item 6-30 unique set36 in columns 1062-1086

I31-35=1087 ;item 31-35 in columns 7-11

*

&END

C1

C2

C3

C4

C5

U0106

U0107

U0108

U0109

U0110

U0111

U0112

U0113

U0114

U0115

U0116

U0117

U0118

U0119

U0120

U0121

U0122

U0123

U0124

U0125

U0126

U0127

U0128

U0129

U0130

C6

C7

C8

C9

C10

U0206

.

.

.

.

.

.

U3627

U3628

U3629

U3630 ;item identification here

END NAMES

I labeled the common items C1-C180.

Example control files for individual sets:

SET 1 CONTROL FILE

&INST

TITLE='ANALISIS DATA SET 1'

ITEM1=5

NI=35

XWIDE=1

NAME1=1

CODES=01

TABLES=1010001000111001100001

Data=set1.dat

&END

C1

C2

C3

C4

C5

U0106

U0107

U0108

U0109

U0110

U0111

U0112

U0113

U0114

U0115

U0116

U0117

U0118

U0119

U0120

U0121

U0122

U0123

U0124

U0125

U0126

U0127

U0128

U0129

U0130

C6

C7

C8

C9

C10

END NAMES

SET 2 CONTROL FILE

&INST

TITLE='ANALISIS DATA SET 2'

ITEM1=5

NI=35

XWIDE=1

NAME1=1

CODES=01

TABLES=1010001000111001100001

Data=set2.dat

&END

C6

C7

C8

C9

C10

U0206

U0207

U0208

U0209

U0210

U0211

U0212

U0213

U0214

U0215

U0216

U0217

U0218

U0219

U0220

U0221

U0222

U0223

U0224

U0225

U0226

U0227

U0228

U0229

U0230

C11

C12

C13

C14

C15

END NAMES

I really need your help. Maybe you can point me to material to study, or to an example similar to my analysis, so that I can identify where I went wrong.

Thank you.

Mike.Linacre:

iyliajamil, you say: "when i run my mforms= control file i can not get person labels in table 18 same as in table 18 for individual set analysis"

Reply: for the DIF analysis, we need the MFORMS table 18 person labels to be:

"Table 18 for individual set analysis" (4 columns) + set number (2 columns)

DIF = 5W2 ; the set number in the person label

iyliajamil:

Thank you.

I understand now.

The item labels in Table 14 and the person labels in Table 18 are now correct.

But when I open "Edit MFORMS= file", the data are not arranged as expected.

It should come out like this, for example:

01010111111..........11111

020102 ..................10011....................11101

030103................................................00111..................00000

.

.

.

350135...................................................................... ....11111.............00101

36013600001........................................................................................11111

But it did not.

Is something wrong with the arrangement?

Only the data for set 1 come out correctly.

For the other sets, only the person labels appear.

Mike.Linacre:

iyliajamil, the problem may be that I have misunderstood your data layout.

Please post a data record from set1.txt and a data record from set2.txt. They must look exactly like they look in the data files.

For example,

set1.txt

456710101010101010101010

set2.txt

985401010101010101010101

iyliajamil:

Thank you.

I have figured out where the mistake was in my MFORMS= control file, and I have corrected it.

I am now correcting the arrangement of the data in my MFORMS= control file using your tutorial on concurrent common-item equating, and will then continue with the analysis.

I will get back to you later.

Thank you again for your help.
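For readers following along, one arrangement that is consistent with the per-set control files posted earlier (person label in columns 1-4, 35 responses in columns 5-39) could be generated with a short Python script rather than typed by hand. This is only a sketch under stated assumptions: it assumes that an MFORMS= line of the form `I<first>-<last>=<column>` names item entry numbers in the combined file on the left and the starting column in the original set file on the right, and that the last set's trailing common items wrap around to items 1-5. The function name is hypothetical, and the file names follow the pattern used in this thread.

```python
def mforms_block(n_sets=36, n_unique=25, n_link=5, label_cols=4):
    """Build MFORMS= lines for a circular common-item design (a sketch)."""
    step = n_link + n_unique        # new combined items contributed by each set
    total = n_sets * step           # 1080 for the design in this thread
    first_col = label_cols + 1      # first response column in each set file
    lines = ["MFORMS=*"]
    for k in range(1, n_sets + 1):
        start = (k - 1) * step + 1  # combined entry number of the set's first item
        tail = start + step         # entry number of the trailing common block
        if tail > total:
            tail = 1                # the last set links back to items 1-5
        lines += [
            f"data=set{k}.txt",
            "L=1 ; one line per person",
            f"P1-{label_cols}=1 ; person label in columns 1-{label_cols}",
            f'C{label_cols + 1}-{label_cols + 2}="{k:02d}" ; set identifier',
            f"I{start}-{start + step - 1}={first_col} ; leading common items and unique items",
            f"I{tail}-{tail + n_link - 1}={first_col + step} ; trailing common items",
            "#" if k < n_sets else "*",
        ]
    return "\n".join(lines)


text = mforms_block()
print(text)
```

Under these assumptions, set 1 contributes items 1-30 from column 5 and items 31-35 from column 35, set 2 starts at item 31, and set 36 ends with items 1-5. The settings before MFORMS= (NI=1080, ITEM1=7, NAMELENGTH=6, DIF=5W2) stay as discussed above.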

Mike.Linacre:

Excellent, iyliajamil.

iyliajamil:

hi again,

mr linacre.

I have succeeded in linking 36 sets of items containing 1080 MCQ items in total (180 common items, the rest unique items) using common-item concurrent equating with the mforms function. This item bank is for CAT usage, so the 'measure' value will be used as the difficulty level of each item. Usually, those items that fit (MNSQ infit and outfit values of 0.7 to 1.3 for MSQ type) will be kept in the item bank. Am I correct?

You suggested using the DIF function across sets to identify the common items that behave differently across sets. Those common items should then be separated, also using the mforms function. Am I correct?

there is a few question for you...

1. I have read through the Winsteps manual on the DIF function and find it complicated to understand. My study is not mainly about DIF analysis; I only use DIF to identify common items that behave differently. Is it enough to apply the common rule of thumb (consider the item to have DIF if the p value is < 0.05 and the t value is > 2) to conclude that those common items behave differently between the sets compared?

2. Why do we need to separate the items that have DIF into different groups? Let's say DIF is identified in common item C4 when set 1 and set 2 are compared. If we separate it, does that mean that C4 has different measure values for persons answering set 1 and set 2?

3. How do I do the separation procedure? I have read through it but find it difficult to understand, maybe because the example is very different from my data type. Can you explain it to me in a simpler way?

4. Let's say I manage to do the separation procedure. Can I still use the common item in my item bank? Does it have different measure values? If it does, which one should I use: the measure value from the focal group or from the reference group?

5. I came across the statement that DIF researchers suggest a group size of at least 200 for a DIF study. My minimum group size is only 30. So is it OK to use DIF in my case?

that is all for now, waiting for your explanations. thank you :)

Mike.Linacre:

iyliajamil,

Do not worry about the DIF analysis. Your group sizes are only 30, so that the DIF findings are very weak.

Your fit criteria are strict (0.7 to 1.3 for MSQ type) and are much more demanding than the DIF criteria. The fit criteria are enough.
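The fit screen described here can be sketched in a few lines of Python. This is only an illustration, not Winsteps output handling: the item labels and numbers are made up, and the names `within_fit` and `screen_items` are hypothetical. The 0.7 to 1.3 window is the one quoted in the thread.

```python
# Hypothetical item records: (label, measure, infit_mnsq, outfit_mnsq).
# The numbers are invented for illustration only.
items = [
    ("C1", -0.52, 0.95, 1.02),
    ("C2", 0.31, 1.41, 1.65),
    ("U17", 1.10, 0.66, 0.71),
    ("U18", -1.25, 1.08, 1.29),
]


def within_fit(infit, outfit, low=0.7, high=1.3):
    """Return True when both mean-squares fall inside [low, high]."""
    return low <= infit <= high and low <= outfit <= high


def screen_items(records, low=0.7, high=1.3):
    """Split item labels into those that pass the fit window and those to review."""
    keep, review = [], []
    for label, _measure, infit, outfit in records:
        target = keep if within_fit(infit, outfit, low, high) else review
        target.append(label)
    return keep, review


keep, review = screen_items(items)
print("keep:", keep)
print("review:", review)
```

Items in the review list are candidates for inspection rather than automatic removal; the cut-offs are parameters so that a different window can be tried.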

iyliajamil:

ok...

So... I do not need to do DIF analysis for my data.

I just use the result from mforms containing all the combined data from sets 1 to 36, and look at the fit criteria only to decide whether to keep an item or remove it from the item bank.

am i correct?

thank you for you explanation. it helps me a lot. :)

Mike.Linacre:

iyliajamil,

DIF analysis is part of quality control. We usually like to reassure ourselves that the common items are maintaining their relative difficulty across sets. But we don't have to do this. It is like looking at the 4 tires of your car to verify that they are all about equally inflated.

iyliajamil:

thank you for all the explanation.

:)

iyliajamil:

hi mr. linacre.

Let's say we want to administer 3 tests, with 10 items in each test (dichotomous type). How many common items are needed if we want to link those tests using the common-item equating method?

Mike.Linacre:

iyliajamil, the minimum number is 3 items, but 5 items would be safer.

223. distribution free

uve January 31st, 2012, 2:18am:

Mike,

If you measure my height with a tape measure, the result is not dependent on other people whom you are also measuring at the same time. My height will be the same (error taken into consideration) regardless of anyone else being measured with me. Yet this is not true of person ability or item difficulty. Remove a person or item from the analysis and the results could be quite different. So for example, an item's true difficulty doesn't seem to be intrinsic because it could vary significantly depending on the other items incorporated into the analysis. This is quite contrary to height, and the reason I mention height is that we use it so frequently in our communications to illustrate the Rasch model. How then can we say that the measures we develop are truly distribution free when in fact they seem to be highly dependent upon each other?

Using the Rasch model, we can predict the probability of a correct score given the item difficulty and ability level of the person. I understand how among other things, this can help us with fit. However, if items change based on other items in the assessment, the fit results will also change. Though there is great logic in the math used to develop the Rasch models, there seems to be a great deal of fate involved with the ultimate outcome, something not as prevalent in standard test statistics.

I'm probably approaching all this from an invalid comparison, but I would greatly appreciate your comments.

Mike.Linacre:

Uve, you have identified several problems here:

1. The problem of defining the local origin (zero point).

2. The problem of the sample of objects used to construct the measurement system.

3. The situation in which the measurement takes place

It is instructive to review the history of the measurement of temperature.

The very earliest thermometers looked like modern-day open-top test tubes and strongly exhibited problem 3 - until their manufacturers realized that air pressure was influencing their equipment. Then they sealed the top of the glass tube to eliminate air pressure as a variable and problem 3. was largely solved.

Galileo's thermometer - http://en.wikipedia.org/wiki/Galileo_thermometer - has problems 1. and 2. in its original version. See also my comment about the Galileo thermometer at https://www.rasch.org/rmt/rmt144p.htm

The problem of the local origin (zero point) was initially solved for thermometers by choosing definitive objects, e.g., the freezing point of water at a standard air pressure. The problem of the sample of objects was solved by choosing another definitive object, e.g., the boiling point of water at a standard air pressure.

We are heading in this direction with Rasch measurement. The Lexile system is a leading example - https://www.rasch.org/rmt/rmt1236.htm - but we have a long way to go.

In situations where this is true, "remove a person or item from the analysis and the results could be quite different", then the structure of the data is extremely fragile and findings are highly likely to be influenced by accidents in the data. In general, if we know the measures estimated from a dataset, we can predict the overall effect of removing one person or one item from that dataset, provided that person or item has reasonable fit to the Rasch model.

In the more general situation of change of fit, e.g., using the same test in high-stakes (high discrimination) and low-stakes (low discrimination) situations, then the "length of the logit" changes - https://www.rasch.org/rmt/rmt32b.htm

But we are all in agreement that our aim is to produce truly general measures: https://www.rasch.org/rmt/rmt83e.htm - meanwhile, we do the best that mathematics and statistics permit :-)

uve:

Thanks Mike. These are great resources and you've given me much to think about.

Emil_Lundell:

Hello, Dr. Linacre

You gave a more comprehensible review of the measurement history of temperature, one that explains the points Duncan left implicit in his book (1984). Have you published your example, using better references, anywhere?

Best regards.

Mike.Linacre:

Emil, my comments about thermometers are in Rasch Measurement Transactions, but there are almost no references.

In discussing the history of temperature in a Rasch context, Bruce Choppin blazed the path: Bruce Choppin, "Lessons for Psychometrics from Thermometry", Evaluation in Education, 1985, 9(1), 9-12. But that paper does not have any references.

Many authors of Rasch papers mention thermometers in order to make abstract measurement concepts more concrete, but they do not have references related to thermometers.

Emil_Lundell:

Thanks,

I will quote this the next time I write a paper about Rasch.

//E

P.S. The important thing for the reader is that Wikipedia isn't mentioned and that the reference doesn't go to an internet forum.

225. sample size for FACETS of a rating scale

GiantCorn October 19th, 2012, 7:54am:

Hi everyone, I'm new to this forum and hope to learn a lot.

We are currently developing a rating scale to assess EFL students in a short speaking test. There will be 3 constructs on the scale (lexico-grammar, fluency, interaction skills) and the scale runs from 1 - 5 and half points can be given as well.

In order to evaluate this scale (in terms of how well it conforms to expectations about its use. i.e. do raters use all of the scale? consistently? is each construct robust etc?) can anyone provide a good guide on how to conduct a scale analysis using FACETS?

Also we are in a fairly small scale but busy department. Would it be possible to run such an analysis on the rating scale using only 3 raters (out of 14 we have) all rating say 10 video performances? each video has a pair of candidates. would this be a large enough sample for an initial trial of the rating scale? could we get any useful information on the scales performance to make changes and improvements? or would we need far more raters and /or videos?

Many thanks for your help in advance!

GC

Mike.Linacre:

Welcome, GC.

Here's a start ....

" the scale runs from 1 - 5 and half points can be given as well" - so your rating scale has 9 categories. The proposed dataset is 3 raters x 20 candidates = 60 observations for each construct = 7 observations for each category (on average). This is enough for a pilot run to verify scoring protocols, data collection procedures and other operational details, and also enough to investigate the overall psychometric functioning of the instrument. Precise operational definitions of the 9 categories may be the most challenging aspect.

"do raters use all of the scale? consistently? is each construct robust etc?"

Only 3 raters is insecure. (Notice the problems in Guilford's dataset because he only had 3 raters.) So 5 raters is a minimum. Also the choice of candidates on the videotapes is crucial. They must cover the range of the 9 categories for each construct, and also exhibit other behaviors representative of the spectrum of candidate behavior likely to be encountered.

GiantCorn:

Mike,

thanks very much for your advice. I shall proceed as advised!

GC

GiantCorn:

Hi Mike,

Ok i finally got round to building the data table and my spec file (my first one in a very long time) but something has gone wrong - for some reason in the output it says that some of the half-point scores: -

"are too big or not a positive integer, treated as missing"

What's going wrong here?

Also on my Ruler table I would like each of the rating scale constructs (Fluency, Lexicogrmr, Interaction) to display so i can compare them but when i play about with the vertical= option nothing happens. How should i get each rating scale to display in the table?

I attach my spec file, data file for you. I'm sure I'm probably missing something very easy/obvious here......

Thank you for any help you may be able to offer.

GC

Mike.Linacre:

GC, good to see you are progressing.

1. Your Excel file:

A data line looks like this:

1 1 1 1-3a 1 2 1.5

Facets only accepts integer ratings, so please multiply all your ratings by 2, and weight 0.5

1 1 1 1-3a 2 4 3

Models=

?,?,?,?,R10K,0.5 ; highest doubled rating is 10 (K means Keep unobserved intermediate categories). Weight the ratings by 0.5

2. Rating scale constructs. Do you want each element of facet 4 to have its own rating scale? Then ...

Models=

?,?,?,#,R10K,0.5 ; # means "each element of this facet has its own rating scale"

*
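The doubling step can be done before the data reach Facets. Below is a minimal Python sketch, assuming whitespace-separated data lines whose last three fields are the ratings, as in the example line above. The function names `double_rating` and `double_line` are hypothetical, and the real file layout may differ.

```python
from fractions import Fraction


def double_rating(raw):
    """Convert a half-point rating such as '1.5' to its doubled integer (3)."""
    doubled = Fraction(str(raw)) * 2
    if doubled.denominator != 1:
        # Anything that is not a multiple of 0.5 cannot become an integer category
        raise ValueError(f"{raw!r} is not a multiple of 0.5")
    return int(doubled)


def double_line(line, n_ratings=3):
    """Double the last n_ratings fields of a whitespace-separated data line."""
    fields = line.split()
    head, ratings = fields[:-n_ratings], fields[-n_ratings:]
    return " ".join(head + [str(double_rating(r)) for r in ratings])


print(double_line("1 1 1 1-3a 1 2 1.5"))
```

Using `Fraction` instead of floating point keeps the check for half-point values exact, so a mistyped rating such as 2.25 is rejected rather than silently rounded.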

GiantCorn:

Mike,

1) Aha! Thanks for a poke in the right direction. I think I've got it: because I'm actually using an 11-point scale (if using half points from 0-5), I need to convert the raw scores to reflect the scale, right?

So rater 1 giving a 2.5 for fluency should be 6 on the data sheet. Is this correct?

2) Thus some of my spec file commands need adjustment also. For example the model statement you gave above would be ?,?,?,#,R11

3) I remember reading a paper about a similar rubric where the author argued that the use of PCM would be better than RSM as it was assumed that each rating scale used different criteria that measure different constructs along a common 9 point scale. Would you agree that it would be better for me to use PCM over RSM in this case? It is chiefly the scales I am interested in.

Mike.Linacre:

GC:

1) 0-5 x 2 = 0-10 -> R10

2.5 x 2 = 5

2) ?,?,?,#,R10

3) PCM or RSM? This is discussed in another thread. Please look there ....

GiantCorn:

Dear Mike and others,

Haven't had a chance to write until now. But i have finally managed to run the data on this speaking test rubric we are experimenting with and thought I'd update you while also checking my thinking on a few points. I have attached the output.

Would you agree with the following brief comments regarding this piloting: -

1) seems like data methods and my setup of the spec file was ok, after your help, thnx mike!

2) I probably need more candidates for the middle section of the scales

3) From the category probability curves, it appears that there is over-categorisation for all 3 constructs, but this is probably due to the small sample and the fact that I couldn't get videos that fall exactly into each category. This may be an issue, but its severity is too difficult to judge at this stage. I need more video performances.

4) The scale steps for each construct are not in line, suggesting (quite naturally, I would assume) that these constructs are learned/acquired at different rates. Might there be an argument for weighting each differently in a student's overall score?

5) Is my following line of thought correct regarding the nature of the 3 constructs (fluency, lexicogrmr and interaction): -

The outfit MnSq and ICCs for each construct suggest that they adhere to the Rasch concept and that the categories and scale steps seem logical and sequential, except, arguably, at the lower end of the ability scales (but I would argue this noise is expected at this end due to the nature of beginning/early language acquisition).

Model, Fixed (all same) chi-square significance value of .06 (Fig. 1) suggests that there is a 6% probability that all the constructs measures are the same. So does this mean that each construct probably does add some unique element to the overall measure of "English Speaking skill"? Or have i misunderstood something?

Thanks for your time and patience Mike!

GC

Mike.Linacre:

GC,

Overall the specifications and analysis look good, but did you notice these?

Warning (6)! There may be 3 disjoint subsets

Table 7.3.1 Prompt Measurement Report (arranged by mN).

+----------------------------------------------------------------------------------------------------------------+
| Total  Total  Obsvd    Fair-M |         Model | Infit       Outfit     | Estim. | Correlation  |               |
| Score  Count  Average  Avrage | Measure  S.E. | MnSq  ZStd  MnSq  ZStd | Discrm | PtMea  PtExp | N Prompt      |
|-------------------------------+---------------+------------------------+--------+--------------+---------------|
|   147     30      4.9    4.92 |     .41   .17 |  .87   -.4   .85   -.5 |   1.18 |   .94    .93 | 3 Food        | in subset: 3
|  89.5     15      6.0    5.60 |    -.18   .23 |  .92   -.1   .90   -.1 |   1.13 |   .92    .93 | 2 Last Summer | in subset: 2
|   187     30      6.2    5.67 |    -.23   .18 |  .96    .0   .91   -.1 |    .93 |   .94    .94 | 1 Hobbies     | in subset: 1
|-------------------------------+---------------+------------------------+--------+--------------+---------------|

These "subsets" messages are warning us that there are three separate measurement systems. "Food", "Last Summer" and "Hobbies".

We cannot compare the measures of "Food" and "Last Summer", nor can we compare the measures of "Shinha" and "Scalcude", because they are in different subsets.

So we must choose:

Either (1) the prompts are equally difficult. If so, anchor them at zero.

Or (2) the groups of students who responded to each prompt are equally able. If so, group-anchor them at zero.

Rating scales: the samples are small and there are many categories, so the rating-scale structures are accidental. The probability curves in Tables 8.1, 8.2, 8.3 look similar enough that we can model all three constructs to share the same 0-10 rating scale, instead of 3 different rating scales. This will produce more robust estimates. See www.rasch.org/rmt/rmt143k.htm

GiantCorn:

Mike,

Sorry i have been away from the office and have only just seen this reply. Thank you for your help so far it has been most useful.

Yes i had seen those subset warnings and for the purposes of this analysis will follow your advice (1) assume the prompts are equal and anchor them. About the rating scales - yes i see. I will need many more data points for each category of each construct (minimum 10 right?) before being able to say anything about this aspect of the analysis.

I will continue and try to process a much larger data set this coming semester.......

Thank you once again for your help thus far!

Mike.Linacre:

Good, GC.

You write: "before being able to say anything about this aspect of the analysis."

You will be able to say a lot about your rating scale, but what you say will be tentative, because your findings may be highly influenced by accidents in the data. For instance, the Guilford example in Facets Help has only a few observations for many of its categories, but we can definitely say that the rating-scale is not functioning as its authors intended.

242. Rater Bias without affecting measurement

Tokyo_Jim December 2nd, 2012, 6:29am:

Hi Mike,

I'm working on an analysis where 3-4 teachers rate student performances, and the students then add their own self-ratings after watching their performance on video. We are interested in severity, accuracy, and consistency of the self-raters and specifically want to examine the distribution of self bias scores. I have designated all 'self' ratings as a single element. We can see that "self" is generally severe, has poor fit, and produces a larger number of unexpected observations.

What we'd like to do is see how 'biased' the self-raters are in comparison to the expert raters, without the self ratings affecting the measurement estimation. Not sure how to set this up. I can model the "Self" element as missing, but in that case, it can't be examined for bias interactions. If I anchor "self" to zero while leaving the "expert" elements free, severity ratings are calibrated to "self = zero", but it seems the self-rating is still influencing measurement, as the rank order of student measures is different from the order produced when "self" is modeled as missing.

Is there a way to set this up so that we can produce bias charts and values, without the non-expert rating affecting the student calibrations?

Mike.Linacre:

Thank you for your question, Jim.

There are two ways to do this.

1)

Set the "Self" to missing. Analyze the data. Produce an Anchor file=anc.txt

Edit the Anchor file to add the "Self" element. Analyze the anchor file. The "Self" will be analyzed without changing the anchored measures.

or

2) Weight the "Self" observations very small, such as R0.0001, or with a Models= specification with very small weight: 0.0001

Tokyo_Jim:

Thank you for the very quick answer Mike. Both options are clear. The second one looks easiest.

Tokyo_Jim:

Hello Mike,

A follow up to this previous question. We would like to use the Self-evaluation bias size as a variable in other analyses. I created a rater by student bias report and found what I was looking for in Table 13 (Excel, Worksheet), Students relative to overall measure. Only problem is the bias analysis is limited to 250 cases, and we have N = 390. I do see the same information in Table 13.5.1 in Notepad, but can't export it.

Is there an easy way to get or create this file for all cases in a way that can be conveniently converted to an SPSS variable?

TIA

Jim

Mike.Linacre:

Jim,

1. Copy the rows you want from Table 13.5.1 in NotePad into an Excel worksheet

2. In Excel, "Data", "Text to Columns"

This should give you the numbers you need in columns, suitable for importing into SPSS
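For many rows, the same "Text to Columns" step can be scripted. The Python sketch below is only a rough illustration: the layout of Table 13.5.1 is not shown in this thread, so it assumes rows bordered by "|" with whitespace-separated numeric fields, and the function name `table_rows_to_csv` is hypothetical.

```python
import csv
import io


def table_rows_to_csv(text):
    """Split report rows on whitespace after dropping '|' borders; return CSV text."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for line in text.splitlines():
        cells = line.replace("|", " ").split()
        # Keep only rows whose first cell is a number; this skips rules and headings
        if cells and cells[0].replace(".", "", 1).lstrip("-").isdigit():
            writer.writerow(cells)
    return out.getvalue()


# Made-up sample rows in the assumed layout
sample = (
    "|------+------|\n"
    "| 147 30 4.9 | .41 .17 |\n"
    "| 89.5 15 6.0 | -.18 .23 |\n"
)
print(table_rows_to_csv(sample), end="")
```

Labels that contain spaces would be split into several cells by this approach, so element labels are best kept to single words or handled by fixed column positions instead.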

Tokyo_Jim:

Thanks Mike. It worked

265. Longitudinal Growth Measures

uve December 19th, 2012, 11:48pm:

Mike,

In our district, secondary students typically take 4 quarterly exams in math for the year. These usually vary in length between 30 and 50 items. Recently I rectangular copied the responses to calibrate items using all four exams in a concurrent equating process. So I now have ability measures based on all 170 items. I'd say there is probably 80 to 90 percent respondent overlap, so the four different exams are well linked. The problem is that each covers mostly different material. There are no identical Q2 items on Q1, etc. What I'd like to do is see if I can determine if a student's measure has changed significantly across the exams. Now that the items are calibrated together, here would be my next steps:

1) Calibrate ability levels for Q1 using only the items found on Q1 anchored to the equated 170-item version.

2) Repeat for Q2-Q4.

3) To determine significant year change: t = (Q4 score - Q1 score) / SQRT(S.E.(Q4)^2 + S.E.(Q1)^2). Not sure about degrees of freedom, maybe total items answered between the two tests minus one?

Would this work?

Mike.Linacre:

Uve, is this the situation?

a) There are 4 separate tests with no common items: Q1, Q2, Q3, Q4 (30 to 50 items each)

b) Students have responded to one or more of the tests.

c) Student abilities have changed between tests.

If this is correct, then the test sessions do not have common items nor common persons, and so cannot be co-calibrated.

Since both the students and the items have changed between tests, then "virtual equating" (equating on item content) is probably the most successful equating method: https://www.rasch.org/rmt/rmt193a.htm

After equating the tests, then the 4 measures for each student can be obtained. Then your 3) will apply, with a little extra due to the uncertainty of the equating.

For significance t-statistic, use Welch-Satterthwaite https://www.winsteps.com/winman/index.htm?t-statistics.htm
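As a rough numerical sketch of step 3) together with the Welch-Satterthwaite degrees of freedom, the Python below may help. It assumes the two measures are already on a common scale, and it takes n1 and n2 to be the numbers of responses behind each measure, which is an assumption of this sketch and not something stated in the thread. The function names are hypothetical, and the extra uncertainty from the equating is not included.

```python
import math


def measure_change_t(m1, se1, m2, se2):
    """t-statistic for the difference between two measures with standard errors."""
    return (m2 - m1) / math.sqrt(se1 ** 2 + se2 ** 2)


def welch_satterthwaite_df(se1, n1, se2, n2):
    """Approximate degrees of freedom for the difference of two estimates."""
    numerator = (se1 ** 2 + se2 ** 2) ** 2
    denominator = se1 ** 4 / (n1 - 1) + se2 ** 4 / (n2 - 1)
    return numerator / denominator


# Made-up example: a student's Q1 and Q4 measures (logits) with standard errors
t = measure_change_t(m1=0.38, se1=0.35, m2=1.20, se2=0.40)
df = welch_satterthwaite_df(se1=0.35, n1=40, se2=0.40, n2=45)
print(f"t = {t:.2f}, d.f. = {df:.1f}")
```

The t value would then be compared with a critical value for the computed degrees of freedom; with tests of 30 to 50 items the result is close to the normal-distribution criterion.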

uve:

Mike,

a) yes

b) most students have responded to all four

c) The mean and std dev ability measures are different: .38/1.04, -.07/.84, -.12/.95, and -.23/.86 (mean and std dev respectively)

If one student takes exams 1-3 and another takes 2-4, then both have taken 2 and 3. Wouldn't this mean that 1 and 4 are now on the same scale? I thought this represented a common person equating procedure.

I've attached an example of the response file. The dashes refer to skipped items and the X's refer to items from an exam the respondent did not take. The dashes are scored incorrect and the X's are not counted against the respondent.

Mike.Linacre:

Uve, are we expecting the students to change ability between Qs? If so, the students have become "new" students. Common-person equating requires that students maintain their same performance levels.

uve:

:-/

I want to be able to compare change over time between the Q's. Since these exams were initially calibrated separately, I didn't feel that comparing scores was viable. I wanted to place the items onto one scale if possible even though there were no common items. I had hoped the common persons would facilitate this, but it seems not.

If we can only use common person equating if we expect no change between Q's then what would be the point of linking them in the first place?

Mike.Linacre:

Uve, in the Q design, we usually want to track the change of ability of each student across time.

"Virtual equating" is a technique to equate the Q tests.

Alternatively, a linking test is constructed using a few items from each of the Q tests. This test is administered to a relevant sample of students who have not seen the Q tests.

uve:

Mike,

Sorry for the late response. As you probably know well by now, it often takes me quite some time to digest all your responses, sometimes months! :)

I suppose I could use the method you mentioned, but I guess I'm trying to work with data I already have. Remember, we give over 100 exams each year. Teachers feel we test too much as it is, so administering a linking test would be out of the question. I would need curriculum staff to review the standards to provide me the info I need to do virtual equating since I am not a content expert. Because of severe budget cuts, time and resources are constrained beyond reason. They would not be able to assist me.

So that brings me back to my original question, and perhaps the answer is still no. But I was hoping that since at least 70% of a given grade level takes all the benchmark exams (probably closer to 80-90%) in the series throughout the year, there is enough overlap that I could calibrate all items together. So if 500 kids all take Q1-Q4 out of 700 kids total, then wouldn't this be the common person group I would need to use common person equating to link the items? Couldn't I take each person's response to all Q's, string them together in Winsteps and calibrate all the items at once?

Mike.Linacre:

Uve, if there are 4 tests with no common items, and the students change ability between test administrations, it appears that there is no direct method of comparing the tests. But perhaps someone else knows of one. The Rasch Listserv is a place to ask: https://mailinglist.acer.edu.au/mailman/listinfo/rasch

Antony:

Hi, Mike. I am running a similar test. The same cohort of students completed 4 subscales from Questionnaire A. A year later, the same subscales were administered, with item order randomized. I suppose students' responses would change due to changes in some attributes (say self-concept). Is this sound? Would the randomization matter?

Mike.Linacre:

Antony, reordering the items should not matter (provided that the Questionnaire continues to make sense).

Analyze last year's data and this year's data separately, then cross-plot the two sets of person measures and then cross-plot the two sets of item difficulties. The plots will tell the story ....

Antony:

Thank you Mike.

I have read articles studying cohort effect by Rasch-scaling Ss' responses. Results were listed clearly, but articles skip the technical know-how. Would you please refer me to other posts or useful websites?

Mike.Linacre:

Antony, there is usually something useful on www.rasch.org or please Google for the technical know-how.

280. Odd dimensionality pattern

uve October 16th, 2012, 5:20am:

Mike,

We have implemented a new elementary English adoption and have begun our first set of assessments. I'm finding identical dimensionality distributions like the one attached. I'm probably not recalling accurately, but for some reason I think I remember a similar post with residual patterns loading like the one below. Any guesses as to what may be causing this?

Mike.Linacre:

Yes, Uve. This pattern is distinctive. The plot makes it look stronger than it really is (eigenvalue 2.8). We see this when there is a gradual change in behavior as the items become more difficult, or similarly a gradual change in item content. Both can have the effect of producing a gradual change in item discrimination.

Suggestion: in Winsteps Table 23.3, are the loadings roughly correlated with the fit statistics?

uve:

Mike,

Below are the correlation results.

           loading        measure       Infit       Outfit
loading     1
measure    -0.856533849   1
Infit      -0.85052961    0.781652331   1
Outfit     -0.866944739   0.868780613   0.9363955   1
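For readers who want to produce a matrix like this one, a minimal Python sketch follows. The column values are invented for illustration and are not the data from this analysis; in practice they would be the per-item loading, measure, infit and outfit values copied from the Winsteps output. The helper name `pearson` is hypothetical.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-item columns (made-up numbers)
columns = {
    "loading": [0.62, 0.35, 0.10, -0.21, -0.48],
    "measure": [-1.40, -0.60, 0.05, 0.70, 1.25],
    "infit": [0.82, 0.91, 1.00, 1.08, 1.19],
    "outfit": [0.75, 0.88, 1.01, 1.12, 1.30],
}

# Print the lower triangle, one row per variable
names = list(columns)
for i, row in enumerate(names):
    cells = [f"{pearson(columns[row], columns[col]):7.3f}" for col in names[: i + 1]]
    print(f"{row:<8}" + " ".join(cells))
```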

Mike.Linacre:

Yes, Uve, the high correlation between Infit and Outfit is expected. All the other correlations are high and definitely not expected. Measures (item difficulties) are negatively correlated with Outfit. So it seems that the most difficult items are also the most discriminating. The "dimension" on the plot appears to be "item discrimination".

uve:

Mike,

My apologies for the bad correlation matrix, but I see that the Measures are positively correlated to outfit, not negatively as you stated. Maybe I'm reading it wrong. Anyway, if I'm right, would that change your hypothesis?

Mike.Linacre:

Oops, sorry Uve. But the story remains the same ....

uve:

Mike,

Here's another situation that's very similar except it appears there are two separate elements to the 2nd dimension of item discrimination. Any suggestions as to what might cause something like this?

Mike.Linacre:

Uve, the person-ability correlation between clusters 1 and 3 is 0.0. This certainly looks like two dimensions (at least for the operational range of the test). Does the item content of the two clusters of items support this?

uve:

Yes, it appears that all but one of the 8 items in cluster 1 are testing the same domain, while all but 4 of the 11 items in cluster 3 are the same domain.

Mike.Linacre:

Great! It is always good to see a reasonable explanation for the numbers, Uve. :-)

uve:

Mike,

I am revisiting this post because I am attempting to define the 1st component in the second example. I have three additional questions:

1) In reference to the first example, you suggested I correlate the loadings with the fit statistics. After doing this and providing the matrix, you focused on the measure/outfit correlation, not the loading/outfit correlation. Why?

2) How does the measure/outfit correlation suggest discrimination?

3) In reference to the second example, you stated that the correlation between the two item clusters suggested two dimensions. However, it was my understanding that each PCA component is measuring a single orthogonal construct. How then can the 1st component be two dimensions?

Thanks again as always

Mike.Linacre:

OK, Uve ... this is going back a bit ....

1) "you focused on the measure/outfit correlation, not the loading/outfit correlation. Why?"

Answer: The measure/outfit correlation is a correlation between two well-understood aspects of the data. The loadings are correlations with hypothetical latent variables. So it is usually easier to start with the known (measures, outfits) before heading into the unknown (loadings).

2) High outfits (or infits) usually imply low discrimination. Low outfits (or infits) imply high discrimination. So we correlate this understanding with the measures.

3) A single orthogonal construct is often interpreted as two dimensions. For instance, "practical items" vs. "theory items" can be a single PCA construct, but is usually explained as a "practical dimension" and a "theory dimension". We discover that they are two different "dimensions" because they form a contrast on a PCA plot.

uve:

Mike,

Two more questions then in relation to your answer to #3:

1) The first example has a single downward diagonal clustering of residuals the construct of which you suggested might be gradual discrimination. I assume then that this is the 2nd construct the two dimensions of which I would need to interpret. These two dimensions bleed into one another gradually which results in the single downward grouping we see. Would these last two comments be fair statements?

2) The second example has two sets of downward diagonal clusterings of residuals also suggesting possible discrimination though I am baffled by the overlap and separation of the two clusters. A single construct appears to be elusive but its two dimensions are much easier to interpret because they load predominantly on just two math domains.

So it appears I have two opposite situations here: the first 2nd construct appears rather easy to interpret but its two dimensions are elusive, while the second 2nd construct is rather elusive but its two dimensional elements are much easier to interpret.

Mike.Linacre:

Yes, Uve. There is not a close alignment between the mathematical underpinnings (commonalities shared by the correlations of the residuals of the items) and the conceptual presentation (how we explain those commonalities). In fact, a problem in factor analysis is that a factor may have no conceptual meaning, but be merely a mathematical accident. See "too many factors" - https://www.rasch.org/rmt/rmt81p.htm

uve:

Mike,

Very interesting and very illuminating. Thanks again for your valuable insights and help.

284. How do you think about these?

dachengruoque December 31st, 2012, 2:07pm:

"It says;

The Winsteps "person reliability" is equivalent to the traditional "test" reliability.

The Winsteps "item reliability" has no traditional equivalent.... [item reliability is] "true item variance / observed item variance"."

I quoted this from a language testing community discussion list. What do you think about it, Professor Linacre? Thanks a lot for your insightful and succinct explanations of Rasch in 2012. Happy New Year to you and all Rasch guys!

Mike.Linacre:

Those are correct, Dachengruoque.

We go back to Charles Spearman (1910): Reliability = True variance / Observed variance

"Test reliability" should really be reported as "Reliability of the test for this sample", but it is often reported as though it is the "Reliability of the test" (for every sample).

dachengruoque:

Therefore, reliability in classical test theory is sample-based and could vary from one sample to another, whereas Rasch reliability is sample-free, or constant for every sample. Could I understand it like that? Thanks a lot for your prompt feedback and citation of the literature.

Mike.Linacre:

Dachengruoque, reliabilities (Classical and Rasch) are not sample-free.

The person (test) reliability depends on the sample distribution (but not the sample size) of the persons.

The item reliability depends on the sample-size of the persons, and somewhat on the sample distribution of the persons.
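
A rough sketch of why the item reliability rises with the number of persons, using Reliability = True variance / Observed variance. It assumes dichotomous items with p-values around .7, a hypothetical true item variance of 1.0 (squared logits), and the approximation error variance = 1 / (N * p * (1-p)). The function names and numbers are illustrative assumptions, not Winsteps output:

```python
def item_error_variance(n_persons, p=0.7):
    """Approximate error variance of one item difficulty estimated from
    n_persons dichotomous responses with success rate p (an assumption)."""
    return 1.0 / (n_persons * p * (1.0 - p))


def reliability(true_variance, error_variance):
    """Spearman: reliability = true variance / (true + error variance)."""
    return true_variance / (true_variance + error_variance)


ITEM_TRUE_VARIANCE = 1.0  # hypothetical spread of item difficulties

# Item reliability for three hypothetical person sample sizes.
item_reliabilities = {
    n: reliability(ITEM_TRUE_VARIANCE, item_error_variance(n))
    for n in (30, 300, 3000)
}

for n, rel in item_reliabilities.items():
    print(f"persons = {n:5d}  item reliability = {rel:.3f}")
```

In this sketch the true item variance is held fixed, so the only thing that changes with the person sample size is the error variance of the item estimates. More persons means smaller item error and a higher item reliability.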

dachengruoque:

Thanks a lot, Dr Linacre!

285. Opposite of logic/intentions

drmattbarney December 29th, 2012, 12:08pm:

Thank you for your analysis, Matt.

Looking at Table 7.5.1, Facet 5 is oriented positively, so that higher score -> higher measure

"global" has an observed average of 5.1 and a measure of -2.03

"small changes" has an observed average of 6.4 and a measure of 2.19

In Table 8.1, "5" is "med" and "6" is "agree"

What are we expecting to see here?

drmattbarney:

thanks for your fast reply, as always, Mike.

To give more context, the scale is a persuasion reputation scale - consistent with Social Psychologist Robert Cialdini's assertion that the grandmasters of influence have a reputation for successfully persuading in highly difficult situations.

If you look at the item content in Table 7.5.1, the qualitative meaning of the easiest item, logit -2.03 relates to the board-of-directors level - the highest possible level in an organization, so this should be exceedingly difficult to endorse, not very easy.

Similarly, the item content of the item with a logit of 2.19 involves very easy persuasion tasks of persuading small changes. Taken together, it looks to me like the raw data are recoded, as the items fit the Rasch model but they are qualitatively the opposite of what should be expected (Board persuasion > small changes).

I'm not too worried about Table 8.1....it's reasonably okay. but Table 7.5.1's qualitative item content looks absolutely backwards.

Hopefully that clarifies

Thanks again

Matt

Mike.Linacre:

Yes, Matt, it looks like there are two possibilities:

1) data recoding somewhere.

2) misunderstanding of the items (paralleling my misunderstanding).

In his Questionnaire class, Ben Wright remarked that respondents tend not to read the whole prompt, but rather pick out a word or two and respond to that. A typical symptom of this is respondents failing to notice the word "not" in a prompt, and so responding to it as a positive statement.

drmattbarney:

thanks, Mike and Happy New Year

286. understanding results

JoeM December 30th, 2012, 11:47pm:

silly question: I always thought that a person's ability was based on which answers they got correct, not necessarily how many questions they got correct (a person who got 3 hard questions correct would have a higher ability than a person who answered 3 easy questions correct). but the simulations I have created are not showing this... all persons who got 15 out of 20 have the same ability, regardless of the difficulty of the items they answered correctly...

if this is the case, how would I weigh the answers so they affect the instrument participants based on which answers they got correct versus how many they correctly answered...

Mike.Linacre:

JoeM, it sounds like you want "pattern scoring".

Abilities based on solely raw scores (whatever the response pattern) take the position that "the credit for a correct answer = the debit for an incorrect answer". Of course, this is not always suitable. For instance, on a practical driving test, the debit for an incorrect action is far greater than the credit for a correct action. In other situations, it may be surmised that the extra ability evidenced by success on a difficult item outweighs mistakes on items that are "too easy".

In Winsteps, we can trim "unexpected successes on very difficult items (for the person)" (= "lucky guesses") and/or "unexpected failures on very easy items (for the person)" (= "careless mistakes"), by using the CUTLO= and CUTHI= commands. www.winsteps.com/winman/cuthi.htm
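
To see why equal raw scores give equal measures when everyone takes the same items, here is a minimal sketch of maximum-likelihood ability estimation for dichotomous Rasch data. The item difficulties are hypothetical and the function name is made up for illustration. It is not Winsteps' own estimation routine, and it does not model CUTLO= or CUTHI=:

```python
import math


def rasch_ability_mle(responses, difficulties, iterations=50):
    """Newton-Raphson maximum-likelihood ability (logits) for one person.

    responses: list of 0/1 scores; difficulties: item difficulties in logits.
    """
    raw_score = sum(responses)
    if raw_score == 0 or raw_score == len(responses):
        raise ValueError("extreme scores have no finite ML estimate")
    theta = 0.0
    for _ in range(iterations):
        probs = [1.0 / (1.0 + math.exp(d - theta)) for d in difficulties]
        expected_score = sum(probs)
        information = sum(p * (1.0 - p) for p in probs)
        # The responses enter the update only through the raw score.
        theta += (raw_score - expected_score) / information
    return theta


difficulties = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]  # hypothetical item bank
easy_three_right = [1, 1, 1, 0, 0, 0]
hard_three_right = [0, 0, 0, 1, 1, 1]

ability_easy = rasch_ability_mle(easy_three_right, difficulties)
ability_hard = rasch_ability_mle(hard_three_right, difficulties)
print(ability_easy, ability_hard)
```

Both patterns have a raw score of 3 on the same six items, so the estimates coincide. The response pattern shows up in the fit statistics, not in the measure.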

287. interaction report

Li_Jiuliang December 21st, 2012, 4:09am:

Hi professor Linacre,

I have some problems with interpreting my FACETS interaction output which is like this (please see attached).

There is some confusion as to the target contrast in Table 14.6.1.2. I don't have problem with 1 MIC and 2 INT. However, for 4 SU, I think the contrast should be .41 - .22 = .19, why the table gives me .20? Also for 3 LU, I think it should be -.34 - (-.59) = .25, then why the table gives me .26?

Thank you very much!

Mike.Linacre:

Thank you for your post, Li Jiuliang.

The reason is "half-rounding".

Please ask Facets to show you more decimal places:

Umean=0,1,3

Or "Output Tables" menu, "Modify specifications"
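
A small illustration of half-rounding. The unrounded measures below are hypothetical (the actual unrounded values are not shown in the post), chosen only so that they round to the .41 and .22 seen in the table:

```python
# Hypothetical unrounded measures that display as .41 and .22.
measure_a = 0.414
measure_b = 0.216

# What the reader computes: the difference of the displayed (rounded) values.
diff_of_rounded = round(round(measure_a, 2) - round(measure_b, 2), 2)

# What the software reports: the unrounded difference, rounded once at the end.
rounded_diff = round(measure_a - measure_b, 2)

print(f"difference of rounded values: {diff_of_rounded:.2f}")
print(f"rounded difference:           {rounded_diff:.2f}")
```

With these assumed values the two results differ by .01, which is the size of the discrepancy in the table.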

Li_Jiuliang:

thank you professor Linacre! i got it!

288. Problem with interpretation

marlon December 21st, 2012, 10:18am:

Good morning Prof. Linacre,

Good morning Rasch people,

I have been doing Rasch analyses for a couple of years and would like to share with you a case I am not sure how to interpret. Maybe you could help me?

I conducted my Rasch analyses on a sample of more than 50,000 students. My analyses of several items resulted in very good fit statistics for the items. Most of them have OUTFITs and INFITs within the limits of .8-1.2. The hierarchy of items seems valid and reasonable.

At the same time, I faced a problem with the values of the person reliability indexes, which are quite low (see below):

SUMMARY (EXTREME AND NON-EXTREME) Person:

REAL RMSE .60 TRUE SD .58 SEPARATION .97 Person RELIABILITY .49

MODEL RMSE .58 TRUE SD .60 SEPARATION 1.05 Person RELIABILITY .52

-------------------------------------------------------------------------------

Person RAW SCORE-TO-MEASURE CORRELATION = .99

CRONBACH ALPHA (KR-20) Person RAW SCORE "TEST" RELIABILITY = .49

Where should I look for the solution for this problem?

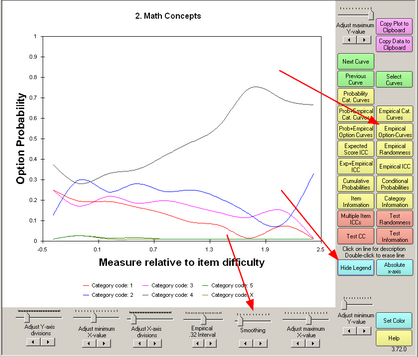

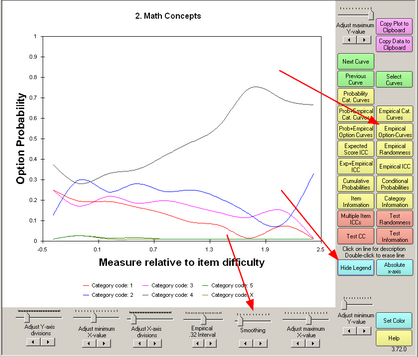

I am considering the hypothesis that in this particular test the categories of the items do not discriminate very well between good and bad students. I've noticed that the categories of the items seem to be very close (too close?) to each other in the picture with the empirical item-category means of the Rasch measures (see the picture attached).

Am I right?

Is there a possibility of creating a test with better reliability using these items on this sample?

Thank you for the help in advance.

Marlon

Mike.Linacre:

Thank you for your questions, Marlon.

As Spearman (1910) tells us: Reliability = True Variance / Observed Variance.

And: Observed Variance = True Variance + Error Variance

This is shown in your first Table. Let's use the "model" numbers:

True S.D. = .60, so True variance = .60^2 = .36

Model RMSE = .58, so Error variance = .58^2 = 0.3364

So, Observed Variance = .36 + .3364 = 0.6964. Then Observed S.D. = 0.6964^0.5 = 0.8345 - please compare this value with the Person S.D. in Winsteps Table 3.1. They should be the same.
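
The arithmetic above can be checked with a few lines of Python. This is only a sketch that re-does the calculation from the two-decimal "model" values in the summary table, so small differences from the reported SEPARATION and RELIABILITY are expected because Winsteps computes from unrounded values. The variable names are illustrative:

```python
import math

# "MODEL" values copied from the summary table (two decimals only).
true_sd = 0.60
model_rmse = 0.58

true_variance = true_sd ** 2        # 0.36
error_variance = model_rmse ** 2    # 0.3364
observed_variance = true_variance + error_variance
observed_sd = math.sqrt(observed_variance)

# Spearman (1910): reliability = true variance / observed variance.
reliability = true_variance / observed_variance
# Separation = true S.D. / RMSE.
separation = true_sd / model_rmse

print(f"observed variance = {observed_variance:.4f}")
print(f"observed S.D.     = {observed_sd:.4f}")
print(f"reliability       = {reliability:.2f}")
print(f"separation        = {separation:.2f}")
```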

Now, let's use these numbers to compute the reliability of a good 18-item test in this situation:

True variance = 0.36

Error variance of a test with 18 items with p-values around .7 (estimated from your = 1 / (18 * .7 * (1-.7)) = 0.264550265

So, expected reliability = (true variance) / (true variance + error variance) = (0.36)/(0.36+0.2646) = 0.58

We see that your observed reliability is around 0.50, but a well-behaved test of 18 items with your sample would be expected to produce a reliability near 0.58.

This suggests that some of the 18 items are not functioning well, or that we need 18 * 0.3364 / 0.2646 = a test of 23 items like the ones on the 18-item test, if we want to raise the reliability of this test from 0.5 to 0.58.

To improve the 18 items, the first place to look in Winsteps is "Diagnosis menu", "A. Polarity". Look at the list of items. Are there any negative or near-zero point correlations? Are there any correlations that are much less than their expected values? These items are weakening the test. Correcting these items is the first priority.