Old Rasch Forum - Rasch on the Run: 2008

Rasch Forum: 2006

Rasch Forum: 2007

Rasch Forum: 2009

Rasch Forum: 2010

Rasch Forum: 2011

Rasch Forum: 2012

Rasch Forum: 2013 January-June

Rasch Forum: 2013 July-December

Rasch Forum: 2014

Current Rasch Forum

161. Using RASCH to determine uni-dimensionality

pjiman1 June 18th, 2008, 8:22pm:

Greetings Mike

I really appreciate your assistance in the past.

I have questions about determining uni-dimensionality.

From what I read on the boards there are a couple of rules of thumb for dimensionality.

Background:

I am in the field of social and emotional competencies. In general, it is not surprising to see the same construct either defined in different ways, multiple constructs with similar items, or constructs with overlapping definitions. For example, what is the difference between self-control, self-management, self-discipline? In my opinion, it appears that different operationalizations of a construct are used depending on the lens that a research adopts. Self-control is usually talked about when discussing anger outbursts, self-management is sometimes defined as handling stress, controlling impulses, and motivating oneself to persevere in overcoming obstacles to goal achievement, etc. In some ways, the overlap among these constructs is fine, as long as the purpose of the construct is clear.

RASCH assumes uni-dimensionality of the measure, not of the data (i.e. the person's responses). Uni-dimensionality of the measure is cleaner for interpretation. However, from what I gathered, it is not possible to prove that a measure is uni-dimensional, but we can gather enough evidence to argue that a measure is uni-dimensional.

Is the following correct - RASCH is concerned with establishing the uni-dimensionality of the measure. That means that RASCH will determine if a set of items is measuring one math skill.

I have a measure of social-emotional constructs for the lower elementary grades. It has 19-items, each item is scored yes or no. It is a student self-report measure. Higher scores indicate higher levels of the social-emotional construct. There are several constructs being measured, and (unfortunately) 1 or 2 items per domain of social and emotional construct. N = 1571

I am trying to establish the uni-dimensionality of the measure. The findings are:

SUMMARY OF MEASURED (NON-EXTREME) Persons

+-----------------------------------------------------------------------------+

| RAW MODEL INFIT OUTFIT |

| SCORE COUNT MEASURE ERROR MNSQ ZSTD MNSQ ZSTD |

|-----------------------------------------------------------------------------|

| MEAN 33.4 18.6 1.94 .73 1.00 .1 .94 .1 |

| S.D. 3.4 1.2 1.06 .19 .27 .8 .72 .8 |

| MAX. 37.0 19.0 3.50 1.57 2.05 3.2 9.54 3.4 |

| MIN. 7.0 4.0 -1.71 .53 .21 -2.3 .14 -1.9 |

|-----------------------------------------------------------------------------|

| REAL RMSE .79 ADJ.SD .71 SEPARATION .90 Person RELIABILITY .45 |

|MODEL RMSE .75 ADJ.SD .75 SEPARATION 1.00 Person RELIABILITY .50 |

| S.E. OF Person MEAN = .03 |

+-----------------------------------------------------------------------------+

SUMMARY OF 19 MEASURED (NON-EXTREME) Items

+-----------------------------------------------------------------------------+

| RAW MODEL INFIT OUTFIT |

| SCORE COUNT MEASURE ERROR MNSQ ZSTD MNSQ ZSTD |

|-----------------------------------------------------------------------------|

| MEAN 2765.1 1541.4 .00 .08 .99 .1 .94 -.1 |

| S.D. 242.0 9.8 1.22 .03 .07 1.9 .23 2.7 |

| MAX. 3058.0 1554.0 2.43 .15 1.11 4.7 1.29 4.5 |

| MIN. 2170.0 1514.0 -2.08 .06 .89 -2.2 .53 -3.2 |

|-----------------------------------------------------------------------------|

| REAL RMSE .09 ADJ.SD 1.22 SEPARATION 13.55 Item RELIABILITY .99 |

|MODEL RMSE .09 ADJ.SD 1.22 SEPARATION 13.66 Item RELIABILITY .99 |

| S.E. OF Item MEAN = .29 |

+-----------------------------------------------------------------------------+

One rule - MnSq is not a good indicator of deviation from uni-dimensionality, so the findings here will not help me. Although, the extremely low person separation and reliability figures are worrisome.

Second rule - Principal contrasts are better for examining dimensionality.

STANDARDIZED RESIDUAL VARIANCE SCREE PLOT

Table of STANDARDIZED RESIDUAL variance (in Eigenvalue units)

Empirical Modeled

Total variance in observations = 38.7 100.0% 100.0%

Variance explained by measures = 19.7 50.9% 48.9%

Unexplained variance (total) = 19.0 49.1% 100.0% 51.1%

Unexplned variance in 1st contrast = 1.8 4.8% 9.7%

Unexplned variance in 2nd contrast = 1.4 3.5% 7.1%

Unexplned variance in 3rd contrast = 1.2 3.2% 6.5%

Unexplned variance in 4th contrast = 1.2 3.0% 6.1%

Unexplned variance in 5th contrast = 1.1 2.9% 5.9%

The unexplained variance is practically equal to variance explained. That seems to indicate that there is a lot of noise in this data, not necessarily the measure. Is this a correct interpretation?

Third rule - I should examine the contrasts and a size of 2.0 and a noticeable ratio of first contrast to measure-explained variance. The ration is 4.8 to 50.8 = .09. This seems good.

I assume that contrast refers to the eigenvalue is this correct? If so the eigenvalue of the first contrast is 1.8. Using a horseshoes and in grenades viewpoint, this value sounds like it be cause for concern that there is multi-dimensionality.

Fourth rule - at least 40% variance in the explained and less than 20% in the next component. The output above clearly meets this criteria.

Fifth rule - examine the cluster of contrasting items for change. I viewed the contrast loadings for the 1st contrast and the second contrast. At the strictest level, there is change in the item clusters. At a relaxed level, some of the items are clustered, some are not. Overall there does appear to be change.

Final rule - does the degree of multi-dimensionality matter? are the differences important enough that the measure has to be separated into two measures?

In my case, it is not uncommon for social and emotional constructs to be conceptually related to each other. Just like a story math problem employs both math and reading skills, positive social relationships involves successful management of emotions. So perhaps the degree of multi-dimensionality does not matter in my case.

Moreover, I did a confirmatory factor analysis of the scale. A one factor model did not fit the data. After tinkering, a three factor model did fit, but with interfactor correlations of .42, .48, .70, which suggests to me that a multi-factor model fits the data and that the factors are related to each other. The exploratory factor analysis did suggest that a one-factor solution was clearly not present and that a 2 or 3 factor solution was more viable.

As I think about this, factor analysis examines the multi-dimensionality of the data, whereas RASCH examines multi-dimensionality of the measure. So it should not be surprising that factor analysis and RASCH dimensionality analysis do not converge because they are examine dimensionality in different ways. In fact, if a person does fit the measure, person measure will likely show up as a misfit rather than as possible evidence that the measure is multi-dimensional. Am I correct?

So after all this, my conclusion is that while there may be multiple factors in the data, from a measurement perspective, it is a one-dimensional measure. It is simpler to analyze and use the measure as a single dimension and I have enough RASCH support to back my conclusion.

However, the greater point in my case is the poor person separation and reliability and I need to go back to the drawing board to get better person measures. Additional, self-report of social and emotional constructs at the early elementary student level are generally known to be difficult, so I might end up scrapping this effort.

Am I correct?

Thanks for wading through this.

Pjiman1

MikeLinacre:

Thank you for your post and your questions, Pjiman1. Very perceptive!

Strictly unidimensional data do not exist in the empirical world. Even straight lines have widths as soon as we draw them.

So a central question is "How close to unidimensional is good enough for our purposes?"

For an arithmetic test, the administrators are happy to combine addition, subtraction, multiplication, division into one measure. But a diagnostician working with learning difficulties may well consider them four different dimensions of mental functioning, producing four different measures.

But statistics can be helpful in making decisions about multidimensionality.

1a. Mean-squares. You are correct.Individual item and person fit statistics are usually too much influenced by local accidents in the data (e.g., lucky guesses) to be strongly indicative of secondary dimensions.

1b. Low reliability. Not indicative of multidimensionality, but indicative of either a test that has too few items for your purposes (only 19), or a sample with not enough performance spread.

2. Unexplained variance = Explained variance. Again an indication of narrow spread of the measures. The wider the spread of the measures, the more variance explained. Not an indicator of multidimensionality.

3. Simulation studies indicate that, for data generated to fit a Rasch model, the 1st contrast can have an eigenvalue of up to 2. This is 2-items strength. A secondary "dimension" with a strength of less than 2 items is very weak.

4. These percents may apply to someone's data, but in general the "Modeled" column tells you what would happen in your data if they fit the Rasch model perfectly. In fact your measures appear to be over-explaining the variance in the data slightly (50.9% vs. 48.9%), suggesting that the data are in some way constrained (not enough randomness), e.g., by a "halo effect" in the responses.

5. Look at the 1st Contrast. Compare the items loading one way (at the top) against the items loading the other way (at the bottom). Is the substantive difference between the items important? This would be the first sub-dimension in the data. If it is important, act on it.

If the 1st Contrast is important, then the second contrast may be worth inspecting etc.

6. "does the degree of multi-dimensionality matter?" - The crucial question. One way is to divide the items into two instruments, one for each sub-dimension. Estimate the person measures and cross-plot them. Does the difference in the person measures matter? (Remember that the standard errors of your "split" person measures will be large, about 1.1 logits).

You concluded: "I need to go back to the drawing board to get better person measures."

Assuming your person sample are representative, then your instrument needs more items and/or your items need longer rating scales (more categories).

If you doubled the length of the instrument from 19 to 38 items, then its reliability would increase from about 0.5 to about 0.7. So it does look as though precise measurement at an individual-person level will be difficult to obtain. But your instrument does produce usefully precise measures for group-level reporting.

pjiman1:

Thanks Mike, most helpful.

Two questions:

You stated - "If you doubled the length of the instrument from 19 to 38 items, then its reliability would increase from about 0.5 to about 0.7. So it does look as though precise measurement at an individual-person level will be difficult to obtain. But your instrument does produce usefully precise measures for group-level reporting."

You stated this because the person reliability could probably only go to .7 and concluded that precision at the individual-person level would be difficult to obtain. What value for person reliability or what other output would you need for you to say that precision at the individual person level was achieved?

Second, what RASCH output values did you use to conclude that the instrument has useful precise measures for group-level reporting? I never thought it was possible that an instrument could be useful for group-level reporting but not individual level reporting. What does this mean in a practical sense? What kind of conclusions could I draw from an instrument that has good group-level precision but not individual level precision.

Finally, I am beginning to think that my thinking about dimensionality has a serious flaw because I thought that dimensionality has the same properties in RASCH as it is discussed in a factor model. I originally thought that I should establish dimensionality for a scale by first using a confirmatory factor analysis to see what is the factor structure. Based on the inter-factor correlations, I could determine if each factor in the model is uni-dimensional. I could also explore alternative factor models using exploratory factor analysis and see if there are factors that are uni-dimensional. Based on the factor model, I would have conducted a RASCH analysis on just those items that load on each of the uni-dimensional factors. So if my CFA had a 3 -factor model, and each was uni-dimensional, I would run three separate RASCH models, one for each dimension. Based on this and other discussions, I am starting to think my thinking is wrong. It is an error to think that dimensionality in RASCH has the same properties as in a factor model. So, say my factor model has three factors with moderate intercorrelations, that says nothing about the dimensionality of the measure in the RASCH world and if RASCH says that the measure (not the data) is uni-dimensional, then it is.

I am now beginning to wonder if RASCH analysis and factor analysis should be used in conjunction with each other. I learned about scale analysis using the factor model. Is there any advantage to using the results obtained from a factor analysis to inform the results obtained from a RASCH? Or should RASCH be the only analysis that matters when investigating the measurement properties of a scale?

Thanks again for reading through this as I try to untangle my thoughts on this topic.

pjiman1

MikeLinacre:

Let's see if these answers will help you, Pjiman1.

You asked: "What value for person reliability or what other output would you need for you to say that precision at the individual person level was achieved?"

To reliabily differentiate between high and low performers in your sample, a test needs a reliability of 0.8. If you are only concerned to discriminate between very high and very low performers, than 0.7.

You asked: "Group level reporting".

If we summarize many measures all imprecise, then the summary, e.g., the mean, can have precision much greater than the precision of any of the individual measures. We apply this every time we measure several times and then base our decisions on the average.

You asked: "Factor analysis and Rasch"

CFA or PCA of the original observations are difficult to align with Rasch dimensionality for several reasons. Here are some

1. The original observations are non-linear. This curvature will distort the factor structure.

2. Unevenness in the distributions of persons or items will produce spurious factors, see "Too many factors" www.rasch.org/rmt/rmt81p.htm

3. If you are using rotation or obliqueness in your CFA, then the variance structure is no longer that in the data, so the factor structure will not match the orthogonal, unrotated variance structure in the Rasch analysis.

4. In CFA, some variance is not allocated to the factors. Rasch corresponds more closely to PCA in which all variance is allocated.

pjiman1:

Mike, thank you again for your invaluable comments.

Final question - should factor analysis and RASCH analysis be used in conjunction with each other to examine scale properties? The reason I ask is that most audiences would want to see results from a factor analysis because that is what they are used to seeing. I am starting to think that perhaps I should skip reporting results from a factor analysis and just report RASCH analysis results.

Thanks for letting me ask this final one.

Peter

MikeLinacre:

Communication of our findings is our biggest challenge, Pjiman1.

If your audience is convinced by factor analysis, then please report it.

A basic rule in science is that reasonable alternative methods should produce the same findings. If they don't, then we need to examine whether the findings are really in the data or merely artifacts of the methods. So if Rasch and factor analysis come to different conclusions about the data, then more thought is definitely necessary. Explaining the reasons for the different conclusions could be the most valuable feature of your research report.

pjiman1:

Sounds good to me. THANK YOU!!!

Pjiman1

mve:

Hi. This thread is very useful. From what I understand, simulation studies indicate that eigenvalues less than 1.4 most likely indicats unidimensionality. In my case, the 1st contrast has an eigenvalue of 3.0. Thus, I would like to look at the 1st contrast and compare the items loading one way against the items loading the other way (looking at loading factors greater than +-0.3). Here is where I get stuck. How do you do this? From what I found in previous literature, it seems that you need to do a paired t-test of the person estimates between the positive subset and the person estimates from all items. Then do the same for the negative subsets. If this is correct, my question is: What is it meant by person estimate? Which Winsteps Table will provide me with person estimate values? Sorry if this sounds to obvious but I've been thinking about it and don't seem to find the answer.

Also, when you said estimate the person measures and cross-plot them. How do you do this in Winsteps? Once again, thanks in advance for your help. Marta

MikeLinacre:

Thank you for this question, Marta.

"eigenvalues less than 1.4 most likely indicates unidimensionality"

If the first eigenvalue is much smaller than 1.4, then the data are "too good" (not random enough in a Rasch conforming way). Examine the data to be sure that there is not something else distorting the randomness. For instance, systematic missing data. We expect the first contrast to be somewhere between 1.4 and 2.0.

"I would like to look at the 1st contrast and compare the items loading one way against the items loading the other way"

Yes, the first step is to identify the substantive content of the contrast. What is this contrast? Is it meaningful, or only an accident of the sample? Is it important to you, or is it something you are prepared to ignore? For instance, on a language test, some items will be relatively easier for native-language speakers (e.g., colloquialisms) but other items will be relatively easier for second-language speakers (e.g., words with similarities to their native speakers). This could be the first contrast in the items. Is this "native-second" dimension important? If we are using the language test to screen job applicants, it probably is not. We only want to know "does this person have high or low language proficiency?" But you may decide to add or omit items which favor one group of speakers.

"a paired t-test of the person estimates"

I'm not familiar with this approach, but here's how to do it in Winsteps

You have your positive subset of items and your negative subset of items (two lists).

Edit your Winsteps control file.

Put a "+" sign in column 1 of all the item labels for the positive items

Put a "-" sign in column 1 of all the item labels for the negative items

Put a "#" sign in column 1 of all the other items

Save your control file.

Analyze your control file.

Produce Table 23. You should see all "+" items at one end, the "-" items at the other end, and the "#" items in the middle.

That confirms everything is correct.

1. Paired t-tests for each person in the sample (perhaps a huge list).

Produce Winsteps Table 31 (differential person functioning) specifying column 1 in the item labels (+,-,#). The numbers you want are in Table 31.3

2. Paired t-test for the subsets of measures.

Copy-and-paste the relevant measures from Winsteps Table 31.3 into Excel ("Text to columns" is the Excel function you will probably want to use).

One column for "Baseline Measure", one column for "+ DPF Measure", one column for "- DPF Measure". Instruct Excel to perform paired t-tests on the three columns.

"cross-plot them."

You can cross-plot with Excel, but the Winsteps scatterplot (Plots menu) may be more useful. Perform separate analyses using ISELECT= for the two sets of items. Write out PFILE= person files. Then, in an analysis of all the data, use the Winsteps scatterplot option to cross-plot each PFILE against the person measures for all the items.

Does this meet your needs, Marta?

mve:

Hi Mike! Your reply is exactly what I needed! Thanks a lot for helping me (and others in this forum). Unfortunately, every answer creates further questions... Can I clarify the following points?

1. When dividing the items as "+", "-", or "#", I guess is up to me whether or not to use a cut-off for the loadings (e.g. only consider + those with loadings more than 0.3).

2. When I create the Excel Table with 'Baseline', 'DPF+' and 'DPF-' columns I have some values with maximum or minimum score (e.g. 3.56>). To perform the t-tests I have removed the > or < signs. I guess this will be fine...

3. When we cross-plot with Winsteps, I have written a PFILE= for all the data, another PDFILE when re-running the analysis for ISELECT="-" and another PDFILE for ISELECT="+". Then we compare whether the graphs that we get are similar or not for:

person measures PFIFLE(all) vs PFILE(+)

and

person measures PFILE (all) vs PFILE (-)

If no major differences seen, again it suggests unidimensionality. Is this right?

4. I suppose that if the first contrast suggest unidimensionality, there is no need to further examine the remaining contrasts? In my case there is 19.8 variance explained by measures and 21 unexplained variance (with 5 contrasts).

5. A final question, I understand that the aim of analysing PCA of the residuals is to establish whether the responses to the items of the questionnaire can be explained by the Rasch model or whether there are any further associations between the resiudals (Table 23). However, I can not fully understand the conceptual difference between this analysis and the info that we can get if we ask for Table 24.

Once again, thanks for your help and sorry for being so inquisitive. Marta

MikeLinacre:

Yes, one set of questions leads to another, Marta.

1. "loadings more than 0.3"

Better to look at the plot in Table 23.2 and see how the items cluster vertically. Often there are 2 or 3 outlying items vs. everything else. Then the contrast dimensions is those items vs. everything else. There are no neutral items.

2. "I have removed the > or < signs"

Yes, but these signs warn you that the values next to them are very uncertain.

3. "If no major differences seen, again it suggests unidimensionality"

It really suggests, "if there is multidimensionality, it is too small to have any meaningful impact on person measurement". So, we may see two types of items in the contrast-plot, but their difference, though statistically significant, does not have a substantive impact on person measurement.

4. "there is no need to further examine the remaining contrasts?"

Look at least one more. (Winsteps always reports 5). Sometimes the first contrast is a small effect among many items, but the second contrast is a big effect among a few items. The big effect may be more important to you than the small effect.

5. Table 23 (item dimensionality) and Table 24 (person dimensionality) are looking at the same data (and the same residuals) from different perspectives.

Table 23 is investigating "Are there two or more types of items?"

Table 24 is investigating "Are there two or more types of persons?"

Usually the two interact: one type of person does better on one type of item, but another type of person does better on another type of item.

Since there are usually many more persons than there are items, it is easier to start by looking at the items. Often we can see the dimensionality pattern so clearly from the items that there is no need to look at the persons.

mve:

Mike, Thanks for all your help. I'm working on everything we've recently been discussing. Marta

OlivierMairesse:

Hi fellow Rasch enthusiasts. I've had a nice e-mail conversation with Mike about multidimensionality, CFA, etc. and he agreed to let me publish it on this forum. Hope this helps! It sure helped me.

QUESTION: In a study to determine the factors influencing car purchase decisions, we asked 1200 people to rate how important they find a particular car attribute in their purchase decision. We used 17 items (e.g. perfomance, design, fuel consumption,...) all to be rated on a 7-point scale. Our results show a strong “bias” towards the end of the response scale (probably meaning that most of the respondents find all attributes important). Performing a PCA (and intentionally violating the assumptions) we found a 5-factor model accounting for about 60% of the variance. Those 5-factors are very meaningfull from an empirical point of vue (e;g; fuel cost, purchase cost and life-cycle costs loading strongly on the same factor with very low crossloadings). However, our response scale was far from linear. I thought it would be a good idea to assess the linearity of the measures by means of the rating scale model. The data showed indeed the need to collapse categories and after a few combinations I found that a 3-point scale would do the job very well. After recoding the data, I redid a PCA. Again, I found comparable factors explaining 56% of the variance. The 5 factors now make even more sense and the loadings are very satisfactory. I was just wondering if the procedure I proposed is a sensible one, or is my rationale hopelessly flawed?

REPLY: This sounds like a situation in which your respondents are being asked to discriminate more levels (7) of importance than they have inside them (3). It probably makes sense to offer them 7 levels, so they think carefully about their choice. But the analysis of 3 levels probably matches their mental perception better.

This is a common situation when persons are asked to rate on long rating scales. For instance, "on a scale from 1 to 10 ....". Few people can imagine 10 qualitatively different levels of something. Similarly for 0-100 visual-analog scales. Collapsing (combining) categories during the analysis nearly always makes the picture clearer. It reduces the noise due to the arbitrary choice by the respondent between categories which have the same substantive meaning to that respondent.

QUESTION: I was more concerned about the fact that you use -please correct me if I’m wrong- a model (rating scale model) that assumes 1 continuous underlying factor to adapt you response scale, while afterwards you perform an analysis searching for more dimensionality in the data.

I believe it is conceivable that the use of the response scale can be dependent on the dimension one is investigating... But I also remember having read somewhere that multidimensionality can be validated by performing a FA on standardized Rasch-residuals, so I’ll pursue that way!

REPLY: Yes, this is also the manner in which physical science proceeds. They propose a theory, such as the "top quark", and then try to make the data confirm it. If the data cooperate (as it did with the "top quark") then the theory is confirmed. If the data cannot be made to cooperate (as with Lysenkoism), then the theory is rejected. In Rasch methodology, we try to make the data confirm that the variable is unidimensional. If the data do not cooperate (using, for instance, analysis of the residuals from a Rasch analysis), then the variable is demonstrated to be multidimensional. We then try to identify unidimensional pieces of the data, and we also investigate whether the multidimensionality is large enough to bias our findings.

QUESTION: Lately, I have been reading on the multidimensionality issue in the Winsteps forum, in the Winsteps help files and in Bond&Fox. Also, your previous emails have helped a great deal.

Still, I seem to struggle a little bit with the interpretation of some of the data.

As it was not my primary concern of constructing a unidimensional instrument to measure importance of car attributes in a purchase decision, I am not surprised that my data do not support the Rasch model.

My main concern is, can I use contrast-loadings of items as a basis for constructing latent factors for a confirmatory factor-analysis?

But, maybe I should display some results to illustrate up my question.

First I did recode the 7-point response scale into a 3-point scale with satisfactory scale diagnostics. Second, I take from horizontal arrangement the pathway analysis that the item difficulties are somewhat similar but that the majority of the items do not fit an unidimensional measure. This is not necessarily bad for me...

This is also confirmed with the following table I guess:

--------------------------------------------------------------------------------------------------------

|ENTRY TOTAL MODEL| INFIT | OUTFIT |PT-MEASURE |EXACT MATCH| |

|NUMBER SCORE COUNT MEASURE S.E. |MNSQ ZSTD|MNSQ ZSTD|CORR. EXP.| OBS% EXP%| Item |

|------------------------------------+----------+----------+-----------+-----------+-------------------|

| 1 2229 939 37.34 .59|1.39 8.5|1.54 9.9| .27 .45| 53.8 60.5| Purchase price |

| 7 1279 935 69.32 .69|1.35 6.7|1.43 6.2| .39 .47| 68.2 70.9| Origin |

| 3 1779 935 51.36 .56|1.16 3.8|1.20 4.5| .40 .50| 54.3 58.9| Design |

| 9 1654 932 55.16 .57|1.17 3.9|1.16 3.8| .43 .50| 53.4 58.3| Ecological aspects|

| 8 1366 937 65.75 .64|1.17 3.7|1.16 2.9| .43 .49| 63.9 66.0| Brand image |

| 16 1863 934 48.70 .56|1.09 2.2|1.11 2.5| .47 .50| 56.8 59.2| Type |

| 2 2323 936 33.75 .61| .79 -5.4| .85 -3.1| .49 .43| 69.6 62.6| Reliability |

| 15 2185 931 38.21 .58| .97 -.7| .95 -1.1| .50 .46| 60.8 59.9| Fuel costs |

| 12 1589 934 57.47 .58|1.04 .9|1.03 .7| .51 .50| 59.6 58.9| Performance |

| 5 1532 936 59.50 .59|1.17 3.9|1.18 4.0| .52 .50| 56.2 59.8| Dealer |

| 10 1922 935 46.95 .56| .82 -4.6| .84 -4.1| .52 .49| 68.1 59.4| Maintenance costs |

| 17 1789 935 51.09 .56| .88 -3.1| .88 -3.0| .54 .50| 62.9 59.0| Size |

| 14 2258 936 36.09 .59| .84 -4.1| .80 -4.4| .55 .45| 65.0 60.4| Safety |

| 13 1937 936 46.55 .56| .86 -3.6| .85 -3.7| .55 .49| 63.8 59.5| Space |

| 11 1666 935 54.98 .57| .77 -6.1| .77 -6.0| .56 .50| 69.4 58.4| Options |

| 4 1914 937 47.32 .56| .71 -7.9| .71 -7.6| .57 .49| 69.9 59.4| Ergonomy |

| 6 1812 937 50.47 .56| .96 -1.1| .94 -1.4| .58 .50| 60.1 59.1| Warranty |

|------------------------------------+----------+----------+-----------+-----------+-------------------|

| MEAN 1829.2 935.3 50.00 .58|1.01 -.2|1.02 .0| | 62.1 60.6| |

| S.D. 293.8 1.8 9.73 .03| .20 4.7| .23 4.6| | 5.6 3.1| |

--------------------------------------------------------------------------------------------------------

I am not really inclined to delete items from the list as they all seem to misfit the Rasch model (except for warranty and fuel costs). Which, again, in my case is not necessarily bad.

However, deleting items based on infit/outfit MNSQ only (ignoring ZSTD) could serve my purpose later on, but I am wondering if it is allowed to proceed this way?

Despite not being a proof of multidimensionality in the measure, it could on the other hand be an indication of multidimensionality in the data, or am I mistaken?

Third, from the following dimensionality map I presume that what I expected (multidimensionality) is (partially) confirmed:

Table of STANDARDIZED RESIDUAL variance (in Eigenvalue units)

-- Empirical -- Modeled

Total raw variance in observations = 27.7 100.0% 100.0%

Raw variance explained by measures = 10.7 38.6% 38.5%

Raw variance explained by persons = 5.3 19.1% 19.1%

Raw Variance explained by items = 5.4 19.5% 19.4%

Raw unexplained variance (total) = 17.0 61.4% 100.0% 61.5%

Unexplned variance in 1st contrast = 2.7 9.9% 16.1%

Unexplned variance in 2nd contrast = 1.7 6.3% 10.2%

Unexplned variance in 3rd contrast = 1.4 5.1% 8.3%

Unexplned variance in 4th contrast = 1.4 4.9% 8.0%

Unexplned variance in 5th contrast = 1.2 4.2% 6.9%

---------------------------------------------------------------

|CON- | | INFIT OUTFIT| ENTRY |

| TRAST|LOADING|MEASURE MNSQ MNSQ |NUMBER Item |

|------+-------+-------------------+--------------------------|

| 1 | .64 | 51.36 1.16 1.20 |A 3 Design |

| 1 | .56 | 65.75 1.17 1.16 |B 8 Brand image |

| 1 | .51 | 57.47 1.04 1.03 |C 12 Performance |

| 1 | .40 | 69.32 1.35 1.43 |D 7 Origin |

| 1 | .32 | 48.70 1.09 1.11 |E 16 Type |

| 1 | .27 | 47.32 .71 .71 |F 4 Ergonomy |

| 1 | .13 | 54.98 .77 .77 |G 11 Options |

| 1 | .08 | 51.09 .88 .88 |H 17 Size |

| 1 | .04 | 59.50 1.17 1.18 |I 5 Dealer |

| |-------+-------------------+--------------------------|

| 1 | -.63 | 38.21 .97 .95 |a 15 Fuel costs |

| 1 | -.60 | 46.95 .82 .84 |b 10 Maintenance costs |

| 1 | -.51 | 55.16 1.17 1.16 |c 9 Ecological aspects |

| 1 | -.37 | 36.09 .84 .80 |d 14 Safety |

| 1 | -.37 | 37.34 1.39 1.54 |e 1 Purchase price |

| 1 | -.23 | 33.75 .79 .85 |f 2 Reliability |

| 1 | -.16 | 50.47 .96 .94 |g 6 Warranty |

| 1 | -.16 | 46.55 .86 .85 |h 13 Space |

---------------------------------------------------------------

---------------------------------------------------------------

|CON- | | INFIT OUTFIT| ENTRY |

| TRAST|LOADING|MEASURE MNSQ MNSQ |NUMBER Item |

|------+-------+-------------------+--------------------------|

| 2 | .75 | 51.09 .88 .88 |H 17 Size |

| 2 | .59 | 48.70 1.09 1.11 |E 16 Type |

| 2 | .55 | 46.55 .86 .85 |h 13 Space |

| 2 | .13 | 37.34 1.39 1.54 |e 1 Purchase price |

| 2 | .02 | 38.21 .97 .95 |a 15 Fuel costs |

| |-------+-------------------+--------------------------|

| 2 | -.41 | 50.47 .96 .94 |g 6 Warranty |

| 2 | -.37 | 59.50 1.17 1.18 |I 5 Dealer |

| 2 | -.23 | 69.32 1.35 1.43 |D 7 Origin |

| 2 | -.21 | 65.75 1.17 1.16 |B 8 Brand image |

| 2 | -.19 | 33.75 .79 .85 |f 2 Reliability |

| 2 | -.14 | 51.36 1.16 1.20 |A 3 Design |

| 2 | -.14 | 36.09 .84 .80 |d 14 Safety |

| 2 | -.12 | 46.95 .82 .84 |b 10 Maintenance costs |

| 2 | -.07 | 47.32 .71 .71 |F 4 Ergonomy |

| 2 | -.07 | 55.16 1.17 1.16 |c 9 Ecological aspects |

| 2 | -.06 | 54.98 .77 .77 |G 11 Options |

| 2 | -.06 | 57.47 1.04 1.03 |C 12 Performance |

---------------------------------------------------------------

From these data I could be inclined to recognize three plausible dimensions in the measure. The + items in the first contrast describe something like “driver experience or status aspects”, the - items something like “life cycle costs”. In the second contrast the + items describe something like “practical aspects”, while the - items describe something related to the “dealer”.

Based on the remaining items the Rasch dimension, one could tentatively describe this as a “decency-luxury dimension”, (going from safety/reliability to option list/ergonomy/comfort)

The second dimension could be a “budget dimension” where people which are concerned with their budget find fuel and maintenance costs important, while on the other hand endorsers of perfomance and design items are less concerned about budget issues.

A third dimension could be something of a “supplier or car-specific dimension”. People concerned with practical specs of their vehicle could be less concerned about where it actually comes from.

I tried to incorporate those dimensions into a SEM (CFA in LISREL). Not surprisingly I had a bad fit of the data (when the model actually converged...). Especially combining the contrast-items of the first contrast proved to be a bad idea.

I believe this was because of my initial misunderstanding of FA trying to find multidimensionality in the data (even when the scores are “linearized”) whereas RaschFA seeks to explain departures from unidimensionality in the measure.

However, when using the contrasting items to construct latent factors, I have a very good fit of the data to the hypothesized model. (See graph below). Coincidence? I guess not, but this made me wonder what the nature of the relationship between CFA and RFA actually is....

So again, from a practical point of view I am curious if analyzing contrast-loadings of items can be used as a basis for constructing latent factors for a confirmatory factor-analysis? Also, it seems that omitting items with infit/outfit MNSQ > 1.4 (regardless of the ZSTD) serves the CFA a great deal in terms of fit. Do you have any idea why that is, because I expected it to be related to the testing of an unidimensional construct.

REPLY: You wrote: As it was not my primary concern of constructing a unidimensional instrument to measure importance of car attributes in a purchase decision, I am not surprised that my data do not support the Rasch model.

Reply: Do not despair! Perhaps your data do accord with the Rasch model for the most part. Let us see ...

Comment: Carefully constructed and administered surveys, intended to probe one main idea, nearly always accord with the Rasch model. If they don't, then that tells us our "one main idea" is not "one main idea", but more like a grab bag of different ideas, similar to a game of Trivial Pursuit.

You wrote: My main concern is, can I use contrast-loadings of items as a basis for constructing latent factors for a confirmatory factor-analysis?

Reply: Yes.

You wrote: First I did recode the 7-point response scale into a 3-point scale with satisfactory scale diagnostics:

Reply: Take another look at your 7-point scale. I suspect your sample can discriminate 5 levels. But with your large sample size, any loss of statistical information going from 5 categories to 3 categories probably doesn't matter.

You wrote: Second, I take from horizontal arrangement the pathway analysis that the item difficulties are somewhat similar but that the majority of the items do not fit an unidimensional measure. This is not necessarily bad for me...

Reply: That plot may be misleading to the eye. Perhaps it has squashed the y-axis and stretched the x-axis. Edward Tufte and Howard Wainer have published intriguing papers on the topic of how plotting interacts with inference.

You wrote: This is also confirmed with the following table I guess:

Reply: Yes the plot is constructed from the table. But notice your sample sizes are around 2,000. These sample sizes are over-powering the "ZSTD" t-test. See the plot at https://www.winsteps.com/winman/index.htm?diagnosingmisfit.htm - meaningful sample sizes for significance tests are 30 to 300.

So ignore the ZSTD (t-test) values in this analysis, and look at the MNSQ values (chi-squares divided by their d.f.). Only one MNSQ value (1.54) is high enough to be doubtful. And even that does not degrade the usefulness of the Rasch measures (degradation occurs with MNSQ > 2.0).

Overall your data fit the Rasch model well.

--------------------------------------------------------------------------------------------------------

|ENTRY TOTAL MODEL| INFIT | OUTFIT |PT-MEASURE |EXACT MATCH| |

|NUMBER SCORE COUNT MEASURE S.E. |MNSQ ZSTD|MNSQ ZSTD|CORR. EXP.| OBS% EXP%| Item |

|------------------------------------+----------+----------+-----------+-----------+-------------------|

| 1 2229 939 37.34 .59|1.39 8.5|1.54 9.9| .27 .45| 53.8 60.5| Purchase price |

You wrote: I am not really inclined to delete items from the list as they all seem to misfit the Rasch model (except for warranty and fuel costs). Which, again, in my case is not necessarily bad.

Reply: Please distinguish between underfit (noise) and overfit (excessive predictability). Anything with a negative ZSTD or mean-square less than 1.0 is overfitting (too predictable) in the statistical sense, but is not leading to incorrect measures or incorrect inferences in the substantive sense.

You wrote: However, deleting items based on infit/outfit MNSQ only (ignoring ZSTD) could serve my purpose later on, but I am wondering if it is allowed to proceed this way?

Reply: Rasch measurement is a tool (like an electric drill). You can use it when it is useful, and how it is useful. That is your choice.

You wrote: Despite not being a proof of multidimensionality in the measure, it could on the other hand be an indication of multidimensionality in the data, or am I mistaken? Third, from the following dimensionality map I presume that what I expected (multidimensionality) is (partially) confirmed:

Table of STANDARDIZED RESIDUAL variance (in Eigenvalue units)

-- Empirical -- Modeled

Total raw variance in observations = 27.7 100.0% 100.0%

Raw variance explained by measures = 10.7 38.6% 38.5%

Raw variance explained by persons = 5.3 19.1% 19.1%

Raw Variance explained by items = 5.4 19.5% 19.4%

Raw unexplained variance (total) = 17.0 61.4% 100.0% 61.5%

Unexplned variance in 1st contrast = 2.7 9.9% 16.1%

Reply: Yes, there is noticeable multi-dimensionality. The variance explained by the first contrast (9.9%) is about half that explained by the item difficulty range (19.5%). The first contrast also has the strength of the unmodeled variance in about 3 items (eigenvalue 2.7). This is more than the Rasch-predicted chance-value which is usually between 1.5 and 2.0.

This Table tells us the contrast is between items like Design ("Sales appeal") and items like Fuel Cost ("Practicality"). This makes sense to us as car buyers

---------------------------------------------------------------

|CON- | | INFIT OUTFIT| ENTRY |

| TRAST|LOADING|MEASURE MNSQ MNSQ |NUMBER Item |

|------+-------+-------------------+--------------------------|

| 1 | .64 | 51.36 1.16 1.20 |A 3 Design |

| 1 | .56 | 65.75 1.17 1.16 |B 8 Brand image |

| 1 | .51 | 57.47 1.04 1.03 |C 12 Performance |

| |-------+-------------------+--------------------------|

| 1 | -.63 | 38.21 .97 .95 |a 15 Fuel costs |

| 1 | -.60 | 46.95 .82 .84 |b 10 Maintenance costs |

| 1 | -.51 | 55.16 1.17 1.16 |c 9 Ecological aspects |

You wrote: From these data I could be inclined to recognize three plausible dimensions in the measure. The + items in the first contrast describe something like “driver experience or status aspects”, the - items something like “life cycle costs”. In the second contrast the + items describe something like “practical aspects”, while the - items describe something related to the “dealer”.

Reply: Yes, but there is also the bigger overall Rasch dimension of "buyability". The contrasts are lesser variations in the data.

You wrote: I tried to incorporate those dimensions into a SEM (CFA in LISREL). Not surprisingly I had a bad fit of the data (when the model actually converged...). Especially combining the contrast-items of the first contrast proved to be a bad idea.

Reply: Rasch and SEM are different perspectives on the data. Rasch is looking for more-and-less amounts (with predicted randomness in the data). SEM is looking for more-and-less correlations (and trying to explain away randomness in the data). So, sometimes the methodologies concur, and sometimes they disagree.

QUESTION: 1) You wrote: “Rasch and SEM are different perspectives on the data. Rasch is looking for more-and-less amounts (with predicted randomness in the data).

SEM is looking for more-and-less correlations (and trying to explain away randomness in the data).”

I am not quite sure what you mean by “amounts”. If I understand correcly this refers to “locations” on a latent Rasch dimension, is it?

So, bridging both CFA and RaschFA, a Rasch dimension may host different “factors” in the ‘traditional’ sense of the term. This makes sense, seeing that instruments like the SCL90 is alleged to have a 9-factor structure while the Rasch analysis uncovers only two dimensions.

Incase of the car survey, items with positive loadings on the 1st contrast (design, brand image, performance) may constitute a latent factor (“driving experience/status”)

and the items with negative loadings (fuel costs, environmental aspects, maintenance costs) another latent factor (“life-cycle costs”). The fact that items cluster is probably because of similar substantive meaning to the respondents, translated in high intercorrelations in the data.

In a Rasch sense, these two factors operate on different (opposing) locations of a secondary Rasch dimension. In the CFA these dynamics seem to be translated in near zero-correlations.

2) You wrote: “Take another look at your 7-point scale. I suspect your sample can discriminate 5 levels”.

Actually I did that before. I went from 7 to 6 to 5 to 4 to 3. Because the nature of the question asked “How important is this car attribute when you purchase a car”, a lot of people scored only in the top 2-3 categories. I had to collapse the original categories 1,2,3 and 4 into ‘1’; 5 and6 into ‘2’ and 7 became ‘3’ in order for each category to represent a distinct categrory of the latent variable.

3) You wrote: “That plot may be misleading to the eye. Perhaps it has squashed the y-axis and stretched the x-axis.” And “These sample sizes are over-powering the "ZSTD" t-test.”

I guess the problem I had with the bubble chart is indeed because of these inflated t-statistics because of the large sample. I should probably dispose of using this chart in my report.

About the sample size sensitivity of the ZSTD t-test in polytomous items, a recent open access paper has been published about this issue. Maybe it could be useful to include this in the manual as well... http://www.biomedcentral.com/content/pdf/1471-2288-8-33.pdf

I was thinking maybe it could be useful to inlcude effect sizes next to the t-test values, so that the guidelines could remain free of sample size issues...

REPLY: This becomes complicated for the Rasch model.

The Rasch model predicts values for SS1 (explained variance) and SS2 (unexplained variance). Empirical SS1 values that are too high overfit the model, and values that are too low underfit the model. The Rasch-reported INFIT statistics are also the (observed SS2 / expected SS2).

Winsteps could report the observed and expected values of SS1/(SS1+SS2). We can obtain the predicted values from the math underlying www.rasch.org/rmt/rmt221j.htm

You wrote: Does the t/sqrt(df) have a similar 0 to 1 range?

Reply: No. It is a more central t-statistic, with range -infinity to +infinity.

The correlation of the square-root (mean-square) with t/sqrt(df) is 0.98 with my simulated data.

So perhaps that is what you are looking for ...

pjiman1:

Hello Mike,

I wish to get your impression of my analysis of dimensionality across three waves.

Task - Analysis of dimensionality of implementation Rubric. Determine if the rubric is uni-dimensional and if this dimensionality remains invariant across waves.

Background - Items are for a rubric for assessing quality of implementation of prevention programs. 16 items, 4 rating categories. Rating categories have their own criteria. Criteria for rating category 1 is different from 2.

Items 1, 2 represent a readiness phase, items 3,4,5,6 represent a planning phase, items 7, 8, 9, 10 represent an implementation phase, items 11, 12, 13, 14, 15, 16 represent a sustainability phase.

Rubric administered at 3 waves

Using a Rating Scale model for the analysis

Steps for determining dimensionality

1. Empirical variance explained is equivalent to Modeled Variance

2. person measure var > item difficulty variance, person measure SD > Item difficult S.D.

3. Unexplained variance 1st contrast, the eigenvalue is not greater than 2.0 (indication of how many items there might be).

4. Variance explained by 1st contrast < variance of item difficulties

5. 1st constrast variance < simulated RASCH data 1st contrast variance, 1% difference between the simulated and actual data is okay, 10% is a red flag.

6. Variance in items is at least 4 times more than the variance in the 1st contrast

7. Variance of the measures is greater than 60%

8. Unexplained variance in the 1st contrast eigenvalue < 3.0, < 1.5 is excellent, and < 5% is excellent

9. Dimensionality plot - look at the items from top to bottom, any difference?

10. cross-plot the person measures

11. Invariance of the scale over time -

a) Stack , items x 3 waves of persons, perform DIF analysis of items vs. wave. We are interested in the stability of the item difficulties (measures) across time. Want to see who (persons) have changed over time as the item difficulties remain stable over time. Use a cross-plot of the persons measures wave 1 by wave 2.

b) Rack - No need to Rack given the current version of Winsteps. Racking the data see what has changed. Analysis might be appropriate here because over time, interventions occurred that impact specific items of the scale. Identify the impact of the activity by racking the items 3 waves of items by persons and seeing the change in item difficulty as an effect of treatment.

Plan - examine steps 1-10 for the measure at each wave. Look at rack and stack across all waves.

results in attached document.

My conclusion is that I have multi-dimensionality. Would you concur? Sorry for the long document.

Pjiman1

MikeLinacre:

Thank you for your post, Pjiman1.

Your list of 10 steps are indicators of multidimensionality, much as a medical doctor might use 10 steps to identify if you have a disease. At end, the doctor will say "No!" or "The disease is ....".

It is this last conclusion that is missing. What is the multidimensionality?

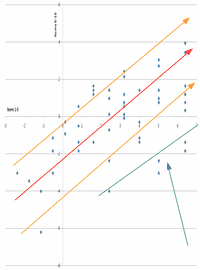

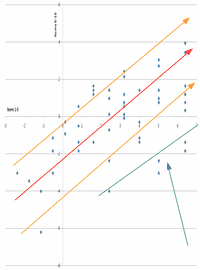

Based on your 23.2, and the item difficulty and misfit statistics, there is a difference between STEPS1-5 and the other items. Is this another dimension or something else?

Your scatterplot on page 34 is instructive. It measures each person on STEPS1-5 (S.E. 1 logit) and on the other items (S.E. .5 logits). So we can conceptualize the confidence intervals on your plot: the 95% confidence band extends about 1.6 logits perpendicularly away from the trend line.

In the plot, my guess at the trendline is the red line. The confidence bands are indicated by the orange lines. The plot is more scattered than the Rasch model predicts, but is it two dimensions? The persons for which the two dimensions would be different are indicated by the green arrow. Those people have much higher measures on the first five items than on the other items. Is this a learning or familiarity effect? Is it a difference in the item dimension or a different style among the persons?

We can think of the same situation on a language test. The green area would be "second-language learners". They are much better at reading than at speaking, compared to the native-language learners of the same overall ability.

Based on this plot, for most purposes this instrument is unidimensional. But if we are concerned about the off-dimensional people, then it is two-dimensional.

Does this make sense, Pjiman1 ?

pjiman1:

Mike,

Your assistance is much appreciated. I am still reviewing your response and my response and I hope to post something soon. Just wanted to thank you for reviewing that large document.

Pjiman1

pjiman1:

Thank you very much for your insights Mike. And as always, like the previous RASCH poster Marta said, every answer leads to more questions...

Your question “what is the dimensionality” is a good one. I suspect that because dimensionality is in some ways, a social construction and based upon the needs of the measurement, the more we can connect the RASCH results with theory, the better we can justify the dimensionality of the scale.

The scale is a school wide prevention program implementation scale. Here are the items:

1. Principal commits to schoolwide SEL

2. Engage stakeholders and form steering committee

3. Develop and articulate shared vision

4. Conduct needs and resources assessment

5. Develop action plan

6. Select Evidence based program

7. Conduct initial staff development

8. Launch SEL instruction in classrooms

9. Expand instruction and integrate SEL schoolwide

10. Revisit activities and adjust for improvement

11. Provide ongoing professional Development

12. Evaluate practices and outcomes for improvement

13. Develop infrastructure to support SEL

14. Integrate SEL framework schoolwide

15. Nurture partnerships with families and communities

16. Communicate with Stakeholders

Items 1 through 10 are supposed to be conducted in sequence. Items 11 through 16 are items that are to be addressed after 1 through 10 are completed.

To me when I review the item content, Steps 1-5 constitute a “planning” dimension. Steps 6 - 10 and 11 through 16 constitute an “action” dimension. These two dimensions fit the theory of prevention program implementation and also theories of learning and adopting new innovations. Usually, when schools adopt new programs, they tend to engage in planning to adopt the new program, but they also try it out and see how it works and from that experience, make adjustments to the program. So there usually is a planning and action phase that takes place at the same time within the school as it adopts a program. The combination of engaging in both phases helps inform the school on how best to adopt the program. The dimensionality could look like this:

(2 program implementation dimensions)

<------------------------------------------------------------------------------------------>

Easy Planning tasks Hard planning tasks

<------------------------------------------------------------------------------------------>

Easy Action tasks Hard Action Tasks

However, based on the scatterplot on page 34 (and thank you for your comment on that plot) you stated - “Based on this plot, for most purposes this instrument is unidimensional. But if we are concerned about the off-dimensional people, then it is two-dimensional.” The 5 persons who scored outside the green line had high person measures on steps 1-5 and very low scores on the remaining items. So it would seem that these 5 persons saw steps 1-5 very differently than steps 6 -10 and items 11-16. Upon examination of the persons that produced these scores, not sure if there are learning or familiarity effects here and not sure if a different style is evident (schools that attend to steps 1-5 more so than other schools). I am sensing that you are wondering if the 2nd dimension of this instrument means anything to those 5 persons who are outside of the 95% confidence band. Based on what I know of those persons, I cannot think of anything that would distinguish those 5 persons on this second dimension. I have no reason to think that the 2nd dimension helps me discriminate between the persons. It could be that those 5 persons (schools) had low scores on the 2nd dimension. In other words, I am not concerned about those 5 persons that are off dimension.

You also said that based on the PCA 23.2 tables, item difficulties and misfit statistics that items 1-5 are a 2nd dimension. So for the most part, there are two dimensions here, but if the persons do not produce scores that suggest that they are using the two dimensions of the scale in notably different ways, then we can say that the scale is uni-dimensional?

Let’s say the two dimensions are planning and action dimensions. If the planning dimension is easiest to do and the action dimension is harder to do, then perhaps this is a one dimensional scale with planning on the easy end and action on the harder end. Like this:

(program implementation dimension)

<----------------------------------------------------------------------------------------->

Planning tasks (Easy) Action tasks (Hard)

I am leaning towards the one dimensional scale for simplicity sake. I can say that there are two dimensions here, but in some ways (based on the scatterplots), the two dimensions do not give me much more information than if I had just a one dimensional scale. So to answer your question - “what is the multi-dimensionality?” I would say that there is a planning and an action dimension, but all the information I need is best served when the two dimensions are together on the same scale.

If this conclusion satisfies your question “What is the multidimensionality?” then I believe my work in establishing the two dimensions of the scale, but treating them as if they are one scale, is done, correct?

Additional questions:

Thank you for your comment on the scatterplot on page 34. You made a guess at the trend line, how did you arrive at that guess? Were the scatterplots for wave 1 (p.9) and wave 2 (p.22) not as instructive or did they not show signs of multi-dimensionality, that is they did not have enough persons that were outside the 95% confidence band.

You mentioned that based on the item difficulties and the misfit statistics, you saw another dimension. What did you see in those stats that helped you come to this conclusion?

Based on the results, do you think there might be additional dimensions within steps 6 -10 and steps 11-16?

Were the rack and stack plots (p. 36 - 39) helpful in any way? Did the Stack plot, with its items locating at beyond the +-2 band at different waves suggest something about the non-invariance of the items across waves? Does it say something about the dimensionality of the measure? Did the Rack plots say something about which items changed from wave to wave? Does it say something about the dimensionality of the measure?

Thanks again for your valuable work and assistance. The message board is a great way to learn about RASCH.

pjiman1

pjiman1:

Hi Mike,

Got a message that you had replied to my latest post on this thread, but when I logged on, I could not find it. Just wanted to let you know in case the reply did not go through properly. Thanks!

pjiman1

MikeLinacre:

Pjiman1, I started to respond, and then realized that you understand your project much better than I do. You are now the expert!

pjiman1:

Thanks Mike for the affirmation. It's nice to know I can proceed.

pjiman1

mathoman:

thank you all

we are folow up your posts

dr.basimaust:

Dear sir, would you answer my question concerning best method to determin unidimenstionality of an five category attitude scale composing 30 items distrubuting on three subscales, each has 10 items. the scale has been applied on 183 students. thank you dr. Basim Al-Samarrai

MikeLinacre:

Thank you for your post, Dr. Basim Al-Samarrai.

In the Winsteps software, Table 23 "dimensionality" is helpful.

https://www.winsteps.com/winman/index.htm?multidimensionality.htm in Winsteps Help suggests a procedure to follow.

sailbot:

I wish this post could be kept at the top. It does seem that many of us have dimensionality questions, and this is probably the best thread on the issue. I found it 1000 time helpful.

rag:

I have found this thread super helpful, thank you Michael and everyone else.

I follow the key differences between the CFA and Rasch PCA methods to assess dimensionality, and why they may converge in some cases and not in others. My question relates to how we identify what is going on when the results diverge dramatically.

I have an assessment that contains 9 dichotomous items with 417 respondents, which hypothetically reflect a single latent construct or dimension. Running it through a Rasch model, and looking at the PCA of the residuals, the first contrast/eigenvalue is 1.4. See results:

Table of STANDARDIZED RESIDUAL variance (in Eigenvalue units)

-- Empirical -- Modeled

Total raw variance in observations = 12.5 100.0% 100.0%

Raw variance explained by measures = 3.5 28.0% 27.7%

Raw variance explained by persons = 1.8 14.0% 13.9%

Raw Variance explained by items = 1.7 14.0% 13.8%

Raw unexplained variance (total) = 9.0 72.0% 100.0% 72.3%

Unexplned variance in 1st contrast = 1.4 11.4% 15.8%

Unexplned variance in 2nd contrast = 1.3 10.5% 14.5%

Unexplned variance in 3rd contrast = 1.2 10.0% 13.9%

Unexplned variance in 4th contrast = 1.1 9.0% 12.5%

Unexplned variance in 5th contrast = 1.0 8.4% 11.6%

This tells me that I've got evidence for a single dimension.

However, when I run through a CFA, the story is very different. I am using the lavaan package in R, which deals with dichotomous items by calculating tetrachoric correlations and then running some form of a weighted least squares algorithm. A single factor model gives me poor fit (RMSEA=0.063, Comparative Fit Index=0.714). The loadings are all over the place, some positive and some very negative. A 2 factor model fits somewhat better, but still has problems.

I should also point out that while KR20 is around 0.6, person-separation reliability is .04, so my data are not the greatest (looking at the item/person map, most items are way below most respondents scores, with 8 of 9 items having a pvalue over 0.80).

From a Rasch perspective, I have evidence for unidimensionality, from a CFA perspective I most certainly do not. My thought is initially that the measures explain a small portion of respondent variance (28%), the remaining variance may not be systematically dependent in any other way, so the PCA of Rasch residuals wouldn't find a high contrast. The CFA, running against the full item variance (rather than what is leftover after the measure variance is removed) just picks up a lot of that noise that isn't related to anything else. If I am right about this, then it looks like I have evidence that the assessment isn't really measuring anything systematic and should probably be given a serious examination from the conceptual perspective before moving forward.

Any thoughts?

Mike.Linacre:

rag, have you looked at https://www.rasch.org/rmt/rmt81p.htm ? It indicates that one Rasch dimension = two CFA factors.

177. Ability strata

Raschmad January 1st, 2008, 5:45pm:

Hello folks,

Separation is the number of ability strata in a data set.

For example, a person separation index of 4 indicates that the test can identify 4 ability groups in the sample.

I was just wondering if there's a way to distinguish these 4 ability groups. Is there a way to (empirically) conclude that, for instance, persons with measures from say, -3 to -1.5 are in stratum 1, persons with measure between -1.5 and 0.5 are in stratum 2 and so on?

Cheers

MikeLinacre:

Thank you for your question Raschmad.

You write: "Separation is the number of ability strata in a data set."

Almost "Separation is the number of statistically different abilities in a normal distribution with the same mean and standard deviation as the sample of measures estimated from your data set." - so it is a mixture of empirical and theoretical properties, in exactly the same way as "Reliability" is.

You can see pictures of how this works at www.rasch.org/rmt/rmt94n.htm.

Empirically, one ability level blends into the next level, but dividing the range of the measures by the separation indicates the levels.

drmattbarney:

[quote=MikeLinacre]Thank you for your question Raschmad.

dividing the range of the measures by the separation indicates the levels.

Great question Raschmad; and excellent clarification Mike. The pictures were terrific. This seems very helpful for setting cut scores, whether passing a class, or pre-employment testing. Naturally, depends on the theory and applied problem one is addressing with Rasch measures, but am I on the right track?

Matt

MikeLinacre:

Sounds good, Drmattbarney. You would probably also want to look at www.rasch.org/rmt/rmt73e.htm

Raschmad:

Mike and Matt,

I had standard setting in mind when I posted the question.

However, I am not sure to what extent this can help.

Probably some qualitative studies are required to establishthe the validity of the procedure (Range/Separation).

Cheeers

jimsick:

Mike,

I'm reviewing a paper that reports a Rasch scale with a person reliability of .42 and a separation of .86. I'm just pondering what it means to have a separation of less than 1. From the Winsteps manual:

SEPARATION is the ratio of the PERSON (or ITEM) ADJ.S.D., the "true" standard deviation, to RMSE, the error standard deviation. It provides a ratio measure of separation in RMSE units, which is easier to interpret than the reliability correlation. This is analogous to the Fisher Discriminant Ratio. SEPARATION2 is the signal-to-noise ratio, the ratio of "true" variance to error variance.

If the ratio of the true variance to error variance is less than one, does that imply that the error variance is greater than the total variance (not logically possible)?

Or does "true variance" = true score plus error variance? Clearly, it is an inadequate scale, but does separation below one indicate that NO decisions can be reliably made?

regards,

Tokyo Jim

MikeLinacre:

Tokyo Jim: the fundamental relationship is:

Observed variance = True variance (what we want) + Error variance (what we don't want).

When the relability <0.5 and the separation <1.0, then the error variance is bigger than the true variance. So, if two people have different measures, the difference is more likely to due to measurement error than to "true" differences in ability.

In real life, we often encounter situations like this, but we ignore the measurement error. The winner is the winner. But the real difference in ability has been overwhelmed by the accidents of the measurement situation.

The 2009 year-end tennis tournament in London is a good example. The world #2, #3 and #4 players have all been eliminated from the last 4. Are their "true" abilities all less than the world #5, #7 and #9? Surely not. But the differences in ability have been overwhelmed by accidents of the local situation, i.e., measurement error.

C411i3:

Hi I am having some trouble interpreting my separation/strata analysis. I've got person separation values from 1.03 to 1.17, person strata values from 1.71 to 1.89 , and person reliabilities from 0.52 to 0.58 (depending on if extreme measures are included).

These values lead me to think that my test cannot differentiate more than 1 group, perhaps 2 at best. However, previous research has shown that this test (sustained attention task) can detect significant differences in performance in clinical groups (e.g., ADHD kids on- and off-medication).

I am having trouble reconciling the Rasch result (only 1 group) with what I know the test can do in application (distinguish groups).

The items on the test calibrate to a very small range of difficulties (10 items), which is why this result is produced. Is there another way to look at group classification that I am overlooking? Do these statistics mean this test can't really distinguish groups? Counter to other evidence

Any and all advice is appreciated!

-Callie

Mike.Linacre:

Thank you for your question, Callie.

Reliability and separation statistics are for differentiating between individuals. With groups, the differentiation depends on the size of the group. For instance, very approximately, if we are comparing groups of 100, then the standard error of the group mean is around 1/10th of the individual amount, and so the separation for group means would be around 10 times greater than for individuals.

579. Control file

ning April 13th, 2008, 6:12pm:

Dear Mike,

If I set ISGROUP=0, it's PCM that applies to all items...what if I want to run part of the items using RSM and part of the items using PCM using one data set in one analysis? How do I set up the control file?

Thanks

MikeLinacre:

Here's how to do it Gunny. Give each group of items, that are to share the same rating scale, a code letter, e.g., "R". Each PCM item has the special code letter "0" (zero). Then

ISGROUPS = RRR0RRR000RRRRRRRRR

where each letter corresponds to one item, in entry order.

ning:

Thanks, Mike, I should of known that by now...

ning:

Dear Mike, Could you please help me out on the following control file...what am I doing wrong? I'm not able to run at all in the Winsteps.

Thanks,

ITEM1 = 1 ; column of response to first item in data record

NI = 8 ; number of items

XWIDE = 3 ; number of columns per item response

ISGROUPS =RRRRRRR0 ; R=RSM, 0=PCM,

IREFER=AAAAAAAB

IVALUEA=" 1 2 3"

IVALUEB=" 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99100"

MikeLinacre:

There is something strange about this, Gunny. There is no CODES=. Perhaps you mean:

CODES = " 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99100..."

IVALUEA=" 1 2 3"

IVALUEB=" 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99100..."

ning:

Dear Mike,

You just saved my day...I've been staring at it long enough...

Thanks.

mukki:

I'm steal have problem to develop Control fine

MikeLinacre:

Mukki, what is your problem?

dachengruoque:

oh, learned a lot from the post as well, thanks a lot, dr linacre!

783. convert raw data to rasch scaled

danielcui March 31st, 2008, 1:53am:

hi, mike,

i am a rookie in rasch. i am interested in performing confirmatory factor analysis on rasch scaled data. where do i start? i use winsteps.thanks.

MikeLinacre:

Thank you for your question, Daniel.

Do you want to do CFA with Rasch measures as one of the variables?

Or do you want to do CFA on the observations that are used to estimate Rasch measures?

Or .... ?

danielcui:

hi, mike,

thanks for your response. i am validating a questionnaire using rasch analysis. however, i found there is only 40% variance explained by measures, when run the PCA. so i guess it's not in unidemensionality, therefore, i intend to use CFA on rasch scores to conclude the dimensions. please correct me if i am wrong.

daniel

MikeLinacre:

"only 40% ... so i guess it's not unidimensional"

The good news is that "only 40%" indicates that the person and item samples may be central. It does not indicate dimensionality.

For multidimensionality, we need the 40% and the size of the next explanatory component. If it is 20%, then the data are multidimensional, but if it is 2% then they are not ....

danielcui:

hi, mike,

the next component is 7.8%, would it suggest unidimensionality?thanks.

daniel

MikeLinacre:

No test (or even physical measurement) is perfectly unidimensional, Daniel. So the crucial question is "Does this degree of multidimensionality matter?" It did in measuring heat with the earliest thermometers, but it no longer does with most modern thermometers. Then the next question is "what are we going to do about the multidimensionality?"

7.8% would certainly suggest that you should take a look at the items loading on each end of the component. What is the substantive difference between them? Is the difference important enough to merit two separate measurements (like height and weight) or is it not worth the effort (like addition and subtraction).

You could also divide the test in two halves, correspond to the "top" and "bottom" items. Perform two analyses, then cross-plot the person measures from each half. What does it tell you? Are the differences in the person measures between the two halves big enough to impact decision-making based on the test? Which half of the test is more substantively accurate for decision-making?

danielcui:

hi, mike,

i looked at the two halved and found there is some different between each other, in terms of the item dimension. then, what could i next? thanks.

MikeLinacre:

Daniel, "there is some different between each other" - so the question becomes "Does the difference matter in the context of the test?" This is a substantive question about the content of the test. Should there be two test scores or measures reported for each person, or is one enough?

danielcui:

thanks, mike, i guess it need to be separate into two measures.

danielcui:

Hi Mike,

There is IPMATRIX output file in Winsteps. When intend to apply Rasch scaled data in factor analysis, should I use expected response value or predicted item measure? thanks.

MikeLinacre:

What is the purpose of your factor analysis, Daniel?

If we use the "expected responses", then we are factor-analyzing Guttman data. Our factor analyis should discover factors related to the Rasch dimension and the item and person distributions.

If we use the predicted item measures, then we are removing the item difficulties from the raw observations, so the factor analysis will emphasize the person ability distribution. But I have never tried this myself. It would be an interesting experiment, perhaps meriting a research note in Rasch Measurement Transactions.

danielcui:

Hi Mike,

Thanks for your quick reply.

My research is about to refine a questionnaire in quality of life. My intention using Rasch is to convert the response of the questionnaire into interval data, then use factor analysis to identify domains of the construct. Thanks to your online course,I have completed item reduction and category collapse, now I need know the response pattern from the sample subjects,which is the domain of the construct, according to my subjects sample.

I reckon what I mentioned two parameters in the post might not be appropriate, I tried "predicted person measure" instead, which seemed reasonable with the results.( see in the attachment) What do you think? Thank you.

Cheers,

Daniel

MikeLinacre:

There are two factor-analytic approaches to dimensionality (factor) investigations, Daniel:

1. Exploratory common-factor analysis (CFA) of the observations. This looks for possible factors in the raw data. The expectation is that the biggest factor will be the Rasch dimension, and the other factors will be small enough to ignore.

2. Confirmatory principal-components analyis (PCA) of the residuals. The Rasch dimension has been removed from the observations leaving behind the residuals. The PCA is intended to confirm that there are no meaningful dimensions remaining in the residuals. Thus the Rasch dimension is the (only) dimension in the data.

The analysis of "predicted person measure" is closer to CFA of the observations, but with the observations adjusted for their item difficulties. An analysis of data with known dimensionality would tell you whether this approach is advantageous or not.

danielcui:

Hi Mike,

Thanks for clarify the definitions. Sometimes people use CFA as abbreviation for comfirmatory fact analysis and EFA for exploratory factor analysis.

One advantage of Rasch analysis is to convert ordinal data to interval ones. In my case, I try to use Rasch analysis convert the ordinal response "very happy", "happy", "not happy"into interval data so I can use them for linear data analysis. Does predicted person measures provide this information? It looks as give each item with a calibrated measures from each respondent.Please correct me if I am wrong. Thank you.

Daniel

MikeLinacre:

Apologies for the confusion about abbreviations, Daniel.

Rasch converts ordinal data to the interval measures they imply. We can attempt to convert the ordinal data into interval data by making some assumptions.

If we believe that the estimated item measures are definitive, then we would use the predicted person measures.

But if we belive that the estimated person measures are definitive, then we could use the predicted item measures.

This suggests that "predicted person measure - predicted item measure" may be a more linear form of the original data.

danielcui:

thank you so much, mike.

811. The mean measure for the cented facet is not 0

Jade December 28th, 2008, 5:31am:

Dear Mike,

I am doing a Facets analysis of rater performance on a speaking test. I non-centered the rater facet since it is the facet I am interested. The output, however, shows the mean measure for the Examinee facet is .16. I was expecting a 0 for the centered facets such as examinee. I did get a 0 on the item facet. Could anyone here help to explain why the mean measure is .16 not 0 for the centered facet of examinee? My model statement is like this,

Facets = 3 ;3 Facets Model: Rater x Examinee x Item

Non-center = 1

Positive = 2

Pt-biserial = Yes

inter-rater = 1

Model = #,?,?,RATING,1 ;Partial Credit Model

Rating Scale = RATING,R6,General,Ordinal